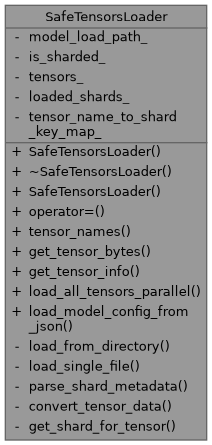

Main class for loading tensors from SafeTensors format files (single or sharded).

#include <safetensors_loader.h>

Classes

| Kind | Name | Description |
| --- | --- | --- |
| struct | TensorInfo | Information about a tensor stored in the SafeTensors file(s). |

Public Member Functions

| Return type | Member | Description |
| --- | --- | --- |
| | SafeTensorsLoader (const std::string &model_load_path) | Constructs a SafeTensorsLoader. |
| | ~SafeTensorsLoader () | Destructor. Cleans up all memory-mapped shards. |
| | SafeTensorsLoader (const SafeTensorsLoader &)=delete | |
| SafeTensorsLoader & | operator= (const SafeTensorsLoader &)=delete | |
| std::vector< std::string > | tensor_names () const | Get a list of all tensor names available in the loaded model. |
| std::vector< uint8_t > | get_tensor_bytes (const std::string &name) const | Get the raw bytes for a tensor, converting to FP32 if needed. |
| const TensorInfo & | get_tensor_info (const std::string &name) const | Get information about a specific tensor. |
| std::map< std::string, std::vector< uint8_t > > | load_all_tensors_parallel () const | Load all tensors in parallel. |

Static Public Member Functions

| Return type | Member | Description |
| --- | --- | --- |
| static bool | load_model_config_from_json (const std::string &model_path_or_dir, ModelConfig &config_to_populate) | Loads model configuration from a JSON file corresponding to a .safetensors model path. |

Private Member Functions

| Return type | Member | Description |
| --- | --- | --- |
| void | load_from_directory (const std::string &directory_path) | Load tensors from a directory, handling index files and multiple shards. |
| void | load_single_file (const std::string &file_path, const std::string &shard_key_override="") | Load a single .safetensors file as a shard. |
| void | parse_shard_metadata (Shard &shard, const std::string &shard_key) | Parse the metadata of a shard and populate tensor information. |
| std::vector< uint8_t > | convert_tensor_data (const uint8_t *data, size_t size, const std::string &dtype) const | Convert raw tensor data to FP32 if needed. |
| const Shard * | get_shard_for_tensor (const std::string &tensor_name) const | Get the Shard object for a given tensor name. |

Private Attributes

| Type | Name |
| --- | --- |
| std::string | model_load_path_ |
| bool | is_sharded_ = false |
| std::map< std::string, TensorInfo > | tensors_ |
| std::map< std::string, std::unique_ptr< Shard > > | loaded_shards_ |
| std::map< std::string, std::string > | tensor_name_to_shard_key_map_ |

Detailed Description

Main class for loading tensors from SafeTensors format files (single or sharded)

Supports both single-file and multi-shard (sharded) SafeTensors models. Handles memory mapping, tensor metadata parsing, and provides efficient access to tensor data. Can load models from a single .safetensors file, a directory containing multiple shards, or a directory with an index file.

Definition at line 120 of file safetensors_loader.h.

Constructor & Destructor Documentation

◆ SafeTensorsLoader() [1/2]

| SafeTensorsLoader::SafeTensorsLoader | ( | const std::string & | model_load_path | ) | explicit |

Constructs a SafeTensorsLoader.

The path can be to a single .safetensors file, or a directory containing .safetensors file(s) and potentially an index.json.

- Parameters

- model_load_path: Path to the model file or directory.

- Exceptions

- std::runtime_error if files cannot be opened, are invalid, or sharding info is inconsistent.

Definition at line 283 of file safetensors_loader.cpp.

References Logger::info(), is_sharded_, load_from_directory(), load_single_file(), loaded_shards_, model_load_path_, tensors_, and Logger::warning().

◆ ~SafeTensorsLoader()

| SafeTensorsLoader::~SafeTensorsLoader | ( | ) |

Destructor. Cleans up all memory-mapped shards.

Definition at line 312 of file safetensors_loader.cpp.

References Logger::info(), and loaded_shards_.

◆ SafeTensorsLoader() [2/2]

| SafeTensorsLoader::SafeTensorsLoader | ( | const SafeTensorsLoader & | | ) | delete |

Member Function Documentation

◆ convert_tensor_data()

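| std::vector< uint8_t > SafeTensorsLoader::convert_tensor_data | ( | const uint8_t * data, size_t size, const std::string & dtype | ) | const | private |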

Convert raw tensor data to FP32 if needed.

Handles conversion from F16/BF16 to FP32 as required by the tensor's dtype.

- Parameters

- data: Pointer to the raw tensor data.

- size: Size of the data in bytes.

- dtype: Data type string (e.g., "F32", "F16", "BF16").

- Returns

- Converted tensor data as a vector of bytes (FP32 format).

Definition at line 580 of file safetensors_loader.cpp.

References cpu_bf16_to_float32(), and cpu_f16_to_float32().

Referenced by get_tensor_bytes().
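The widening described above can be sketched as follows. This is a minimal illustration, not the implementation behind `cpu_f16_to_float32()` or `cpu_bf16_to_float32()`: the names `f16_to_f32`, `bf16_to_f32`, and `to_fp32_bytes` are hypothetical, the sketch assumes a little-endian host (SafeTensors stores values little-endian), and throwing on an unrecognized dtype is an assumption about error handling.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <stdexcept>
#include <string>
#include <vector>

// Hypothetical helper: BF16 is the upper 16 bits of an IEEE 754 binary32 value,
// so widening is a 16-bit left shift.
inline float bf16_to_f32(uint16_t h) {
    const uint32_t bits = static_cast<uint32_t>(h) << 16;
    float out;
    std::memcpy(&out, &bits, sizeof(out));
    return out;
}

// Hypothetical helper: widen one IEEE 754 binary16 value to binary32.
inline float f16_to_f32(uint16_t h) {
    const uint32_t sign = static_cast<uint32_t>(h & 0x8000u) << 16;
    const uint32_t exp = (h >> 10) & 0x1Fu;
    uint32_t mant = h & 0x3FFu;
    uint32_t bits;
    if (exp == 0x1Fu) {                 // infinity or NaN
        bits = sign | 0x7F800000u | (mant << 13);
    } else if (exp != 0) {              // normal number: rebias 15 -> 127
        bits = sign | ((exp + 112u) << 23) | (mant << 13);
    } else if (mant == 0) {             // signed zero
        bits = sign;
    } else {                            // subnormal: normalize the mantissa
        uint32_t shift = 0;
        while ((mant & 0x400u) == 0) { mant <<= 1; ++shift; }
        bits = sign | ((113u - shift) << 23) | ((mant & 0x3FFu) << 13);
    }
    float out;
    std::memcpy(&out, &bits, sizeof(out));
    return out;
}

// Hypothetical counterpart of convert_tensor_data(): returns FP32 bytes.
std::vector<uint8_t> to_fp32_bytes(const uint8_t* data, size_t size,
                                   const std::string& dtype) {
    assert(data != nullptr || size == 0);
    if (dtype == "F32") return std::vector<uint8_t>(data, data + size);
    if (dtype != "F16" && dtype != "BF16")
        throw std::runtime_error("unsupported dtype: " + dtype);  // assumption
    if (size % 2 != 0)
        throw std::runtime_error("16-bit tensor has an odd byte count");
    const size_t count = size / 2;
    std::vector<uint8_t> out(count * sizeof(float));
    for (size_t i = 0; i < count; ++i) {
        uint16_t h;
        std::memcpy(&h, data + 2 * i, sizeof(h));  // little-endian host assumed
        const float f = (dtype == "F16") ? f16_to_f32(h) : bf16_to_f32(h);
        std::memcpy(out.data() + i * sizeof(float), &f, sizeof(f));
    }
    return out;
}
```

Because each 16-bit element becomes a 32-bit one, the output is twice the size of an F16 or BF16 input, while F32 input is copied unchanged.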

◆ get_shard_for_tensor()

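| const Shard * SafeTensorsLoader::get_shard_for_tensor | ( | const std::string & | tensor_name | ) | const | private |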

Get the Shard object for a given tensor name.

Looks up the shard key for the tensor and returns a pointer to the corresponding Shard.

- Parameters

- tensor_name: Name of the tensor.

- Returns

- Pointer to the Shard containing the tensor.

- Exceptions

- std::logic_error if the shard is not found.

Definition at line 514 of file safetensors_loader.cpp.

References get_tensor_info(), loaded_shards_, and tensor_name_to_shard_key_map_.

Referenced by get_tensor_bytes().
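The two-step lookup (tensor name to shard key, then shard key to Shard) might look roughly like the sketch below. `ShardStub` and `find_shard` are hypothetical stand-ins; the real method also consults `get_tensor_info()`, which this sketch omits, and throwing `std::logic_error` for an unknown tensor name is an assumption.

```cpp
#include <cassert>
#include <map>
#include <memory>
#include <stdexcept>
#include <string>

// Stand-in for the real Shard type, which is not shown on this page.
struct ShardStub {
    std::string file_path;
};

// Hypothetical helper mirroring the documented lookup order.
const ShardStub* find_shard(
    const std::map<std::string, std::string>& tensor_to_shard_key,
    const std::map<std::string, std::unique_ptr<ShardStub>>& shards,
    const std::string& tensor_name) {
    // Step 1: tensor name -> shard key.
    const auto key_it = tensor_to_shard_key.find(tensor_name);
    if (key_it == tensor_to_shard_key.end())
        throw std::logic_error("no shard key for tensor: " + tensor_name);
    // Step 2: shard key -> shard object.
    const auto shard_it = shards.find(key_it->second);
    if (shard_it == shards.end() || !shard_it->second)
        throw std::logic_error("shard not loaded: " + key_it->second);
    assert(shard_it->second.get() != nullptr);
    return shard_it->second.get();
}
```

Returning a raw pointer keeps ownership with the `std::unique_ptr` in the shard map, which matches the `const Shard *` return type documented above.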

◆ get_tensor_bytes()

| std::vector< uint8_t > SafeTensorsLoader::get_tensor_bytes | ( | const std::string & | name | ) | const |

Get the raw bytes for a tensor, converting to FP32 if needed.

- Parameters

- name: Name of the tensor to load.

- Returns

- Vector of bytes containing the tensor data (FP32 format).

- Exceptions

- std::runtime_error if tensor not found or conversion fails.

Definition at line 536 of file safetensors_loader.cpp.

References convert_tensor_data(), SafeTensorsLoader::TensorInfo::data_offset, SafeTensorsLoader::TensorInfo::dtype, get_shard_for_tensor(), get_tensor_info(), Shard::get_tensor_raw_data(), and SafeTensorsLoader::TensorInfo::nbytes.
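Since the result is a byte vector, callers usually need to view it as floats. A minimal sketch of that step follows; `fp32_bytes_to_floats` is a hypothetical helper, not part of SafeTensorsLoader, and it assumes the returned bytes really are FP32 values in host byte order.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>
#include <stdexcept>
#include <vector>

// Hypothetical helper: copy FP32 bytes into a float vector.
// memcpy avoids the alignment and aliasing problems of casting the pointer.
std::vector<float> fp32_bytes_to_floats(const std::vector<uint8_t>& bytes) {
    if (bytes.size() % sizeof(float) != 0)
        throw std::runtime_error("byte count is not a multiple of sizeof(float)");
    std::vector<float> values(bytes.size() / sizeof(float));
    assert(values.size() * sizeof(float) == bytes.size());
    if (!values.empty())
        std::memcpy(values.data(), bytes.data(), bytes.size());
    return values;
}
```

With a constructed loader this could be used as `fp32_bytes_to_floats(loader.get_tensor_bytes(name))`, where `name` is one of the strings returned by `tensor_names()`.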

◆ get_tensor_info()

| const SafeTensorsLoader::TensorInfo & SafeTensorsLoader::get_tensor_info | ( | const std::string & | name | ) | const |

Get information about a specific tensor.

- Parameters

- name: Name of the tensor.

- Returns

- Reference to the tensor's information.

- Exceptions

- std::runtime_error if tensor not found.

Definition at line 506 of file safetensors_loader.cpp.

References tensors_.

Referenced by get_shard_for_tensor(), and get_tensor_bytes().

◆ load_all_tensors_parallel()

| std::map< std::string, std::vector< uint8_t > > SafeTensorsLoader::load_all_tensors_parallel | ( | ) | const |

Load all tensors in parallel.

- Returns

- Map of tensor names to their data (FP32 format).

Definition at line 544 of file safetensors_loader.cpp.

References Logger::debug(), Logger::error(), Logger::info(), ThreadPool::submit(), and tensors_.
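The reference list shows that the real method submits work to a `ThreadPool`. As a rough illustration of the same fan-out and collect pattern, the sketch below swaps in `std::async` from the standard library instead. `load_all_parallel` and its `fetch` parameter are hypothetical: `fetch` stands in for a call such as `get_tensor_bytes()` and must be safe to call concurrently.

```cpp
#include <cassert>
#include <cstdint>
#include <functional>
#include <future>
#include <map>
#include <string>
#include <utility>
#include <vector>

using TensorBytes = std::vector<uint8_t>;

// Hypothetical helper: start one asynchronous task per tensor, then collect.
// Unlike a thread pool, this does not bound the number of threads.
std::map<std::string, TensorBytes> load_all_parallel(
    const std::vector<std::string>& names,
    const std::function<TensorBytes(const std::string&)>& fetch) {
    assert(static_cast<bool>(fetch));
    std::vector<std::pair<std::string, std::future<TensorBytes>>> pending;
    pending.reserve(names.size());
    for (const std::string& name : names) {
        pending.emplace_back(
            name,
            std::async(std::launch::async, [&fetch, name] { return fetch(name); }));
    }
    std::map<std::string, TensorBytes> result;
    for (auto& item : pending) {
        // get() blocks until the task finishes and rethrows any exception.
        result.emplace(item.first, item.second.get());
    }
    return result;
}
```

Collecting into the map on the calling thread keeps the map itself free of concurrent writes, so only `fetch` needs to be thread-safe.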

◆ load_from_directory()

| void SafeTensorsLoader::load_from_directory | ( | const std::string & | directory_path | ) | private |

Load tensors from a directory, handling index files and multiple shards.

If an index file is found, parses it and loads the referenced shards. Otherwise, scans for .safetensors files and loads them as individual shards.

- Parameters

- directory_path: Path to the directory containing model files.

Definition at line 318 of file safetensors_loader.cpp.

References Logger::debug(), Logger::info(), is_sharded_, load_single_file(), loaded_shards_, tensor_name_to_shard_key_map_, and Logger::warning().

Referenced by SafeTensorsLoader().

◆ load_model_config_from_json()

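| bool SafeTensorsLoader::load_model_config_from_json | ( | const std::string & model_path_or_dir, ModelConfig & config_to_populate | ) | static |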

Loads model configuration from a JSON file corresponding to a .safetensors model path.

Given the path to a .safetensors model or directory, this method attempts to find a "config.json" in the same directory. If found, it parses the JSON and populates the provided ModelConfig object.

- Parameters

- model_path_or_dir: Path to the .safetensors model file or directory.

- config_to_populate: Reference to a ModelConfig object to be filled.

- Returns

- True if config.json was found and successfully parsed, false otherwise.

Definition at line 607 of file safetensors_loader.cpp.

References ModelConfig::architecture, ModelConfig::bos_token_id, ModelConfig::eos_token_id, Logger::error(), ModelConfig::hidden_size, Logger::info(), ModelConfig::intermediate_size, ModelConfig::is_gguf_file_loaded, ModelConfig::LLAMA3_TIKTOKEN, ModelConfig::LLAMA_SENTENCEPIECE, ModelConfig::max_position_embeddings, ModelConfig::model_name, ModelConfig::num_attention_heads, ModelConfig::num_hidden_layers, ModelConfig::num_key_value_heads, ModelConfig::pad_token_id, ModelConfig::rms_norm_eps, ModelConfig::rope_theta, ModelConfig::tokenizer_family, ModelConfig::unk_token_id, ModelConfig::UNKNOWN, ModelConfig::vocab_size, and Logger::warning().

Referenced by TinyLlamaModel::TinyLlamaModel(), and tinyllama::TinyLlamaSession::TinyLlamaSession().
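The path resolution described above (model file or directory to the neighbouring "config.json") can be sketched with `std::filesystem`. `config_json_path_for` is a hypothetical helper, and deciding "file or directory" purely from the `.safetensors` extension is an assumption; the real method may query the filesystem instead.

```cpp
#include <cassert>
#include <filesystem>
#include <string>

namespace fs = std::filesystem;

// Hypothetical helper: where config.json would be expected for a model path.
fs::path config_json_path_for(const fs::path& model_path_or_dir) {
    assert(!model_path_or_dir.empty());
    // Assumption: a ".safetensors" extension marks a model file, whose parent
    // directory holds config.json; any other path is treated as a directory.
    const fs::path dir = (model_path_or_dir.extension() == ".safetensors")
                             ? model_path_or_dir.parent_path()
                             : model_path_or_dir;
    return dir / "config.json";
}
```

A caller would then check that the returned path exists before parsing it, which corresponds to the documented `false` return when config.json is missing.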

◆ load_single_file()

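| void SafeTensorsLoader::load_single_file | ( | const std::string & file_path, const std::string & shard_key_override = "" | ) | private |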

Load a single .safetensors file as a shard.

Memory-maps the file and parses its metadata to populate tensor information.

- Parameters

- file_path: Path to the .safetensors file.

- shard_key_override: Optional key to use for this shard (e.g., filename).

Definition at line 413 of file safetensors_loader.cpp.

References Logger::debug(), Logger::info(), loaded_shards_, and parse_shard_metadata().

Referenced by load_from_directory(), and SafeTensorsLoader().

◆ operator=()

| SafeTensorsLoader & SafeTensorsLoader::operator= | ( | const SafeTensorsLoader & | | ) | delete |

◆ parse_shard_metadata()

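| void SafeTensorsLoader::parse_shard_metadata | ( | Shard & shard, const std::string & shard_key | ) | private |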

Parse the metadata of a shard and populate tensor information.

Reads the metadata JSON from the shard and adds entries to the tensors_ map.

- Parameters

- shard: Reference to the Shard object.

- shard_key: Key identifying this shard (e.g., filename).

Definition at line 431 of file safetensors_loader.cpp.

References SafeTensorsLoader::TensorInfo::data_offset, Logger::debug(), SafeTensorsLoader::TensorInfo::dtype, Shard::file_path, Shard::metadata_ptr, Shard::metadata_size, SafeTensorsLoader::TensorInfo::name, SafeTensorsLoader::TensorInfo::nbytes, SafeTensorsLoader::TensorInfo::shape, SafeTensorsLoader::TensorInfo::shard_key, tensor_name_to_shard_key_map_, tensors_, and Logger::warning().

Referenced by load_single_file().
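For orientation, a SafeTensors file starts with an 8-byte little-endian unsigned length N, followed by N bytes of JSON metadata, followed by the tensor byte buffer; each tensor's `data_offsets` are relative to the start of that buffer. The sketch below only splits a mapped file into those regions. `HeaderView`, `split_safetensors_buffer`, and `header_json` are hypothetical names, and parsing the JSON itself (which the real method does to fill `tensors_`) is left out.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <stdexcept>
#include <string>

// Hypothetical view over the regions of one SafeTensors file.
struct HeaderView {
    const uint8_t* json;   // start of the JSON metadata
    uint64_t json_size;    // metadata length in bytes
    const uint8_t* data;   // start of the tensor byte buffer
    uint64_t data_size;    // remaining bytes after the metadata
};

// Hypothetical helper: locate the metadata and data regions in a file image.
HeaderView split_safetensors_buffer(const uint8_t* file, size_t file_size) {
    assert(file != nullptr);
    if (file_size < 8)
        throw std::runtime_error("file too small for the 8-byte length prefix");
    uint64_t n = 0;
    for (int i = 7; i >= 0; --i) n = (n << 8) | file[i];  // little-endian decode
    if (n > file_size - 8)
        throw std::runtime_error("metadata length exceeds file size");
    return HeaderView{file + 8, n, file + 8 + n,
                      static_cast<uint64_t>(file_size) - 8 - n};
}

// Hypothetical helper: copy the metadata out as a string for a JSON parser.
std::string header_json(const HeaderView& view) {
    return std::string(reinterpret_cast<const char*>(view.json),
                       static_cast<size_t>(view.json_size));
}
```

Decoding the length byte by byte keeps the sketch independent of host endianness, and validating it against the file size guards against reading past the end of a truncated or corrupt file.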

◆ tensor_names()

| std::vector< std::string > SafeTensorsLoader::tensor_names | ( | ) | const |

Get a list of all tensor names available in the loaded model.

- Returns

- Vector of tensor names.

Definition at line 497 of file safetensors_loader.cpp.

References tensors_.

Member Data Documentation

◆ is_sharded_

| bool SafeTensorsLoader::is_sharded_ = false | private |

True if model is loaded from multiple shard files

Definition at line 195 of file safetensors_loader.h.

Referenced by load_from_directory(), and SafeTensorsLoader().

◆ loaded_shards_

| std::map< std::string, std::unique_ptr< Shard > > SafeTensorsLoader::loaded_shards_ | private |

Map of shard keys (e.g., filenames) to Shard objects

Definition at line 198 of file safetensors_loader.h.

Referenced by get_shard_for_tensor(), load_from_directory(), load_single_file(), SafeTensorsLoader(), and ~SafeTensorsLoader().

◆ model_load_path_

| std::string SafeTensorsLoader::model_load_path_ | private |

Original path provided to constructor (file or directory)

Definition at line 194 of file safetensors_loader.h.

Referenced by SafeTensorsLoader().

◆ tensor_name_to_shard_key_map_

| std::map< std::string, std::string > SafeTensorsLoader::tensor_name_to_shard_key_map_ | private |

Definition at line 202 of file safetensors_loader.h.

Referenced by get_shard_for_tensor(), load_from_directory(), and parse_shard_metadata().

◆ tensors_

| std::map< std::string, TensorInfo > SafeTensorsLoader::tensors_ | private |

Global map of tensor names to their comprehensive info

Definition at line 197 of file safetensors_loader.h.

Referenced by get_tensor_info(), load_all_tensors_parallel(), parse_shard_metadata(), SafeTensorsLoader(), and tensor_names().

The documentation for this class was generated from the following files: