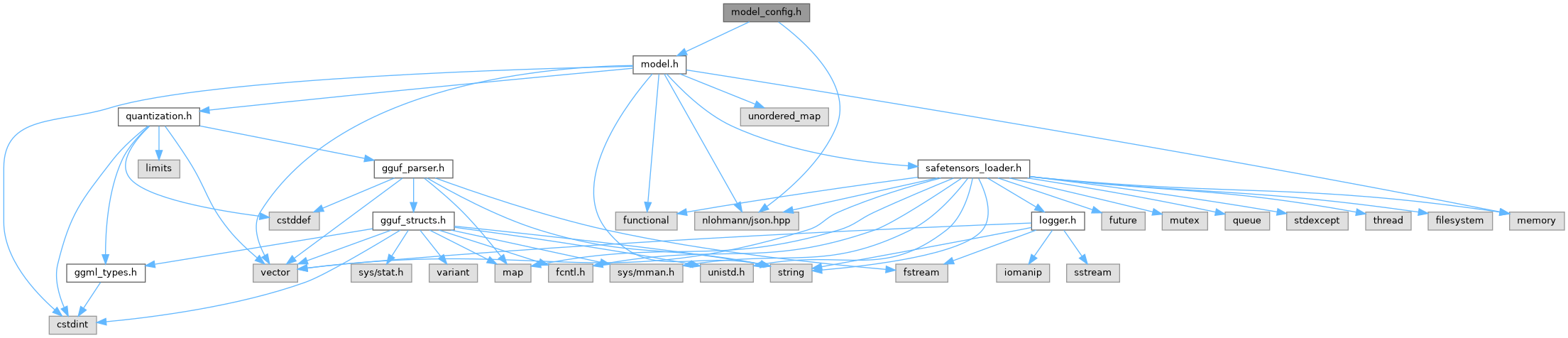

Include dependency graph for model_config.h:

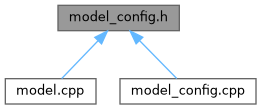

This graph shows which files directly or indirectly include this file:

Go to the source code of this file.

Functions | |

| ModelConfig | parse_model_config (const nlohmann::json &json) |

| ModelConfig | parse_model_config_from_gguf (const GGUFData &gguf) |

Function Documentation

◆ parse_model_config()

| ModelConfig parse_model_config | ( | const nlohmann::json & | json | ) |

Definition at line 20 of file model_config.cpp.

20 {

21 ModelConfig cfg;

37

38 // Infer Architecture if available

39 if (json.contains("architectures") && json["architectures"].is_array() && !json["architectures"].empty()) {

40 // Take the first architecture string if multiple are listed

42 } else {

44 }

45 cfg.model_name = json.value("model_type", cfg.architecture); // Use model_type or fallback to architecture

46

47

48 Logger::info("[parse_json_config] Inferring tokenizer family for SafeTensors. Arch: '" + cfg.architecture + "', Vocab: " + std::to_string(cfg.vocab_size));

50 bool is_llama3_arch_hint_json = (cfg.architecture.find("LlamaForCausalLM") != std::string::npos && // Llama 3 often uses this

52

53 if (is_llama3_vocab_size_json && is_llama3_arch_hint_json) {

55 Logger::info("[parse_json_config] Result: Identified LLAMA3_TIKTOKEN (vocab size + arch hint).");

57 float llama3_rope_candidate = json.value("rope_theta", 500000.0f); // Check rope_theta in config.json

58 if (llama3_rope_candidate > 10000.0f) {

59 cfg.rope_theta = llama3_rope_candidate;

60 Logger::info("[parse_json_config] Adjusted rope_theta to " + std::to_string(cfg.rope_theta) + " for Llama 3 model (was 10000.0).");

61 }

62 }

63 } else if (cfg.vocab_size == 32000 || cfg.architecture.find("Llama") != std::string::npos) { // Common for Llama 1/2/TinyLlama

65 Logger::info("[parse_json_config] Result: Identified LLAMA_SENTENCEPIECE (vocab size or arch hint).");

66 } else {

69 }

70

71

72 return cfg;

73}

Model configuration structure holding architecture and hyperparameters.

Definition model.h:80

@ LLAMA3_TIKTOKEN

@ LLAMA_SENTENCEPIECE

◆ parse_model_config_from_gguf()

| ModelConfig parse_model_config_from_gguf | ( | const GGUFData & | gguf | ) |

Definition at line 75 of file model_config.cpp.

75 {

76 ModelConfig config;

78

79 auto get_meta_string = [&](const std::string& key,

80 const std::string& default_val) -> std::string {

83 std::holds_alternative<std::string>(it->second)) {

84 return std::get<std::string>(it->second);

85 }

86 return default_val;

87 };

88

89 auto get_meta_value = [&](const std::string& key, auto default_value) {

90 using TargetType = typename std::decay<decltype(default_value)>::type;

93 return std::visit(

94 [&](const auto& val) -> TargetType {

95 using T = std::decay_t<decltype(val)>;

96

97 if constexpr (std::is_integral_v<TargetType>) {

98 if constexpr (std::is_integral_v<T> && !std::is_same_v<T, bool>) {

99 if constexpr (std::is_unsigned_v<T> &&

100 std::is_signed_v<TargetType>) {

101 if (val > static_cast<std::make_unsigned_t<TargetType>>(

102 std::numeric_limits<TargetType>::max())) {

104 std::to_string(val) +

105 " overflows TargetType. Using default.");

106 return default_value;

107 }

108 }

109

110 else if constexpr (std::is_signed_v<T> &&

111 std::is_signed_v<TargetType> &&

112 sizeof(T) > sizeof(TargetType)) {

113 if (val > static_cast<T>(

114 std::numeric_limits<TargetType>::max()) ||

115 val < static_cast<T>(

116 std::numeric_limits<TargetType>::lowest())) {

118 std::to_string(val) +

119 " overflows TargetType. Using default.");

120 return default_value;

121 }

122 }

123 return static_cast<TargetType>(val);

124 }

125 } else if constexpr (std::is_floating_point_v<TargetType>) {

126 if constexpr (std::is_floating_point_v<T>) {

127 return static_cast<TargetType>(val);

128 }

129 } else if constexpr (std::is_same_v<TargetType, bool>) {

130 if constexpr (std::is_same_v<T, bool>) {

131 return val;

132 }

133 } else if constexpr (std::is_same_v<TargetType, std::string>) {

134 if constexpr (std::is_same_v<T, std::string>) {

135 return val;

136 }

137 }

139 "' has stored type incompatible with requested "

140 "TargetType. Using default.");

141 return default_value;

142 },

143 it->second);

144 } else {

145 return default_value;

146 }

147 };

148

150 get_meta_value("llama.vocab_size", 32000));

156 config.num_attention_heads);

159 config.max_position_embeddings > 8192) {

161 std::to_string(config.max_position_embeddings) +

162 ", overriding to sensible default (2048)");

163 config.max_position_embeddings = 2048;

164 }

165 config.rms_norm_eps =

166 get_meta_value("llama.attention.layer_norm_rms_epsilon", 1e-5f);

173

178

181 ", Has Merges: " + (has_merges ? "Yes" : "No"));

182

183

189 std::string ggml_tokenizer_model = get_meta_string("tokenizer.ggml.model", "");

190 bool is_tiktoken_style_tokenizer_model = (ggml_tokenizer_model == "gpt2");

191

192 Logger::info("[parse_gguf_config] L3 Hints: arch_hint=" + std::string(is_llama3_arch_hint ? "Y":"N") +

193 ", vocab_size_match=" + std::string(is_llama3_vocab_size ? "Y":"N") +

194 ", has_merges=" + std::string(has_merges ? "Y":"N") +

195 ", ggml_tokenizer_model_key='" + ggml_tokenizer_model + "' (is_tiktoken_style: " + std::string(is_tiktoken_style_tokenizer_model ? "Y":"N") + ")" );

196

197 if (has_merges && is_llama3_vocab_size && is_tiktoken_style_tokenizer_model) {

199 Logger::info("[parse_gguf_config] Result: Identified LLAMA3_TIKTOKEN (merges + vocab_size + ggml_tokenizer_model='gpt2'). Architecture string was: '" + config.architecture + "'");

201 Logger::info("[parse_gguf_config] Note: Classified as Llama 3 based on tokenizer/vocab, but arch string was 'llama'.");

202 }

204 float llama3_rope_candidate = get_meta_value("llama.rope.freq_base", 500000.0f);

205 if (llama3_rope_candidate > 10000.0f) {

206 config.rope_theta = llama3_rope_candidate;

207 Logger::info("[parse_gguf_config] Adjusted rope_theta to " + std::to_string(config.rope_theta) + " for Llama 3 model (was 10000.0).");

208 }

209 }

210 } else if (config.architecture == "llama" || config.architecture.find("Llama-2") != std::string::npos || config.architecture.find("TinyLlama") != std::string::npos) {

212 Logger::info("[parse_gguf_config] Result: Identified LLAMA_SENTENCEPIECE based on architecture: '" + config.architecture + "'");

213 } else {

215 Logger::info("[parse_gguf_config] Result: UNKNOWN tokenizer family for architecture: '" + config.architecture + "'");

216 }

217

218 // Existing chat_template_type and pre_tokenizer_type logic based on architecture and pre_key

224 } else {

228 "'.");

229 }

230

231 if (has_pre_key) {

232 config.pre_tokenizer_type =

233 get_meta_string("tokenizer.ggml.pre", "unknown");

236 } else {

238 }

243

246 Logger::info(

247 "Inferred chat_template_type='llama2' based on model_type and "

248 "missing/different pre_tokenizer_type.");

249 }

250

253 std::holds_alternative<std::string>(template_it->second)) {

254 config.chat_template_string = std::get<std::string>(template_it->second);

256

257 } else {

258 Logger::info(

259 "tokenizer.chat_template not found or not a string in metadata. Will "

260 "use fallback logic.");

262 }

266 Logger::info(

267 "Inferred chat_template_type='llama2' based on model name and "

268 "missing/different pre_tokenizer_type.");

270 Logger::info("Llama 3 model identified. Chat template will primarily rely on 'tokenizer.chat_template' from GGUF if present.");

271 // Set a generic type for now, actual application will use the string.

274 } else {

276 Logger::warning("Llama 3 model detected, but 'tokenizer.chat_template' not found in GGUF metadata.");

277 }

278 }

279 }

280

283 (config.tokenizer_family == ModelConfig::TokenizerFamily::LLAMA_SENTENCEPIECE ? "L2_SPM" : "UNKNOWN")));

284 return config;

285}