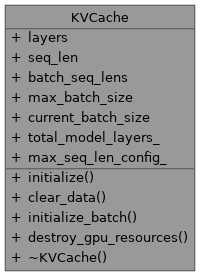

Complete Key-Value cache for all transformer layers.

#include <model.h>

Public Member Functions

| Return type | Member | Description |
| --- | --- | --- |
| void | initialize (const ModelConfig &config, int total_num_model_layers, int num_gpu_layers_to_allocate, int max_seq_len_arg, int num_kv_heads, int head_dim, int max_batch_size_arg=1) | Initializes the KV cache with given dimensions. |
| void | clear_data () | |
| void | initialize_batch (int batch_size) | Initialize batch mode with specified number of sequences. |
| void | destroy_gpu_resources () | |
| | ~KVCache () | |

Public Attributes

| Type | Attribute |
| --- | --- |
| std::vector< KVCacheLayer > | layers |
| int | seq_len = 0 |
| std::vector< int > | batch_seq_lens |
| int | max_batch_size = 1 |
| int | current_batch_size = 0 |
| int | total_model_layers_ = 0 |
| int | max_seq_len_config_ = 0 |

Detailed Description

Complete Key-Value cache for all transformer layers.

Manages the KV cache across all layers of the transformer model, including memory management for both CPU and GPU implementations. Supports both single-sequence and multi-sequence batch processing.
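As a rough illustration of the two modes, the sketch below uses a simplified stand-in, `SketchKVCache`, which is hypothetical and not the real struct from model.h. It carries only the bookkeeping attributes listed above. The helper `cached_length()` is also hypothetical, and it assumes that batch mode is active whenever `current_batch_size` is greater than zero; the page does not state how the real code distinguishes the modes.

```cpp
#include <cassert>
#include <vector>

// Hypothetical stand-in for KVCache: only the bookkeeping attributes
// documented on this page, with the same names and default values.
struct SketchKVCache {
    int seq_len = 0;                  // current length, single-sequence mode
    std::vector<int> batch_seq_lens;  // per-sequence lengths, batch mode
    int max_batch_size = 1;           // upper bound on cached sequences
    int current_batch_size = 0;       // assumed: 0 means "not in batch mode"
};

// Hypothetical helper: number of cached positions for one sequence.
// Assumption: batch mode is active when current_batch_size > 0.
inline int cached_length(const SketchKVCache& cache, int seq_idx = 0) {
    if (cache.current_batch_size > 0) {
        assert(seq_idx >= 0 && seq_idx < cache.current_batch_size);
        return cache.batch_seq_lens[static_cast<std::size_t>(seq_idx)];
    }
    return cache.seq_len;
}
```

In single-sequence mode only `seq_len` is consulted; in batch mode each sequence has its own entry in `batch_seq_lens`, so sequences of different lengths can share one cache.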

Constructor & Destructor Documentation

◆ ~KVCache()

| KVCache::~KVCache () | inline |

Definition at line 224 of file model.h.

References destroy_gpu_resources().

Member Function Documentation

◆ clear_data()

| void KVCache::clear_data () | inline |

Definition at line 180 of file model.h.

References batch_seq_lens, current_batch_size, layers, and seq_len.

Referenced by tinyllama::TinyLlamaSession::generate(), and tinyllama::TinyLlamaSession::generate_batch().
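The body of clear_data() is not reproduced on this page; only the members it references are listed. The following is a minimal sketch of what such a reset could look like, assuming it returns the bookkeeping counters to their defaults and zeroes the per-layer CPU buffers without freeing them. `SketchCpuLayer`, `SketchResettableCache`, and the `k`/`v` vectors are hypothetical names introduced for illustration.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Hypothetical per-layer storage; the real KVCacheLayer is not shown here.
struct SketchCpuLayer {
    std::vector<float> k;
    std::vector<float> v;
};

// Hypothetical stand-in holding the four members clear_data() references.
struct SketchResettableCache {
    std::vector<SketchCpuLayer> layers;
    int seq_len = 0;
    std::vector<int> batch_seq_lens;
    int current_batch_size = 0;

    // Assumed behaviour: reset counters and zero buffers, keep allocations.
    void clear_data() {
        seq_len = 0;
        batch_seq_lens.clear();
        current_batch_size = 0;
        for (auto& layer : layers) {
            std::fill(layer.k.begin(), layer.k.end(), 0.0f);
            std::fill(layer.v.begin(), layer.v.end(), 0.0f);
        }
    }
};

// Usage: reuse one cache for two unrelated prompts.
inline void reuse_example() {
    SketchResettableCache cache;
    cache.layers.resize(2);
    cache.layers[0].k.assign(8, 1.0f);
    cache.seq_len = 4;        // pretend four tokens were cached
    cache.clear_data();       // start the next prompt from position 0
    assert(cache.seq_len == 0);
    assert(cache.layers[0].k.size() == 8);  // storage is retained
}
```

Keeping the allocations and resetting only the contents would let generate() and generate_batch() start a new request without reallocating the cache.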

◆ destroy_gpu_resources()

| void KVCache::destroy_gpu_resources () |

Definition at line 217 of file kv_cache.cpp.

Referenced by ~KVCache().
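Because the destructor calls destroy_gpu_resources(), and the function is also public, it may run more than once on the same object. The sketch below shows one way to make such a release function safe to call repeatedly. It is an illustration under assumptions, not the code from kv_cache.cpp: `std::malloc`/`std::free` stand in for device allocation so the example runs without a GPU, and `SketchDeviceLayer`, `SketchOwningCache`, `k_dev`, `v_dev`, and `allocate()` are hypothetical names.

```cpp
#include <cassert>
#include <cstdlib>
#include <vector>

// Hypothetical per-layer device pointers (host memory stands in for GPU memory).
struct SketchDeviceLayer {
    float* k_dev = nullptr;
    float* v_dev = nullptr;
};

struct SketchOwningCache {
    std::vector<SketchDeviceLayer> layers;

    SketchOwningCache() = default;
    // Owning raw pointers: forbid copies so buffers are never freed twice.
    SketchOwningCache(const SketchOwningCache&) = delete;
    SketchOwningCache& operator=(const SketchOwningCache&) = delete;

    // Hypothetical allocation step; releases any previous buffers first.
    void allocate(int num_layers, std::size_t floats_per_layer) {
        assert(num_layers >= 0);
        destroy_gpu_resources();
        layers.resize(static_cast<std::size_t>(num_layers));
        for (auto& layer : layers) {
            layer.k_dev = static_cast<float*>(std::malloc(floats_per_layer * sizeof(float)));
            layer.v_dev = static_cast<float*>(std::malloc(floats_per_layer * sizeof(float)));
        }
    }

    // Idempotent release: pointers are nulled after freeing, so a second
    // call (for example from the destructor) does nothing harmful.
    void destroy_gpu_resources() {
        for (auto& layer : layers) {
            std::free(layer.k_dev);  // free(nullptr) is a no-op
            std::free(layer.v_dev);
            layer.k_dev = nullptr;
            layer.v_dev = nullptr;
        }
    }

    ~SketchOwningCache() { destroy_gpu_resources(); }
};
```

Nulling each pointer after release is what makes an explicit call followed by the destructor's call safe.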

◆ initialize()

| void KVCache::initialize (const ModelConfig & config, int total_num_model_layers, int num_gpu_layers_to_allocate, int max_seq_len_arg, int num_kv_heads, int head_dim, int max_batch_size_arg = 1) |

Initializes the KV cache with given dimensions.

- Parameters

| Parameter | Description |
| --- | --- |
| config | The model configuration, used to determine whether KVCache quantization is enabled. |
| total_num_model_layers | Total number of layers in the model (for sizing CPU cache vectors). |
| num_gpu_layers_to_allocate | Number of layers for which to allocate GPU device memory. Can be 0. |
| max_seq_len_arg | Maximum sequence length to cache. |
| num_kv_heads | Number of key/value heads. |
| head_dim | Dimension of each attention head. |
| max_batch_size_arg | Maximum number of sequences for batch processing (default: 1 for single-sequence). |

Definition at line 10 of file kv_cache.cpp.

References batch_seq_lens, current_batch_size, Logger::error(), Logger::info(), layers, max_batch_size, max_seq_len_config_, seq_len, total_model_layers_, ModelConfig::use_kvcache_quantization, and Logger::warning().

Referenced by tinyllama::TinyLlamaSession::TinyLlamaSession().
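The dimensions passed to initialize() determine how much memory the cache needs. The helper below is a back-of-the-envelope estimate, not code from kv_cache.cpp: `estimate_kv_cache_bytes` is a hypothetical function, and it assumes one K buffer and one V buffer per layer, each holding `max_batch_size * max_seq_len * num_kv_heads * head_dim` elements. Pass the total layer count to estimate CPU storage or the GPU layer count to estimate device storage; set `bytes_per_element` to 1 to approximate an 8-bit quantized cache (quantization scales are ignored).

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Hypothetical estimate of KV cache storage in bytes.
// Assumes K and V each store one head_dim vector per KV head,
// per position, per sequence, per layer.
inline std::uint64_t estimate_kv_cache_bytes(int num_layers,
                                             int max_seq_len,
                                             int num_kv_heads,
                                             int head_dim,
                                             int max_batch_size = 1,
                                             std::size_t bytes_per_element = sizeof(float)) {
    assert(num_layers >= 0 && max_seq_len >= 0 && num_kv_heads >= 0 &&
           head_dim >= 0 && max_batch_size >= 0);
    const std::uint64_t positions =
        static_cast<std::uint64_t>(max_batch_size) * static_cast<std::uint64_t>(max_seq_len);
    const std::uint64_t per_position =
        static_cast<std::uint64_t>(num_kv_heads) * static_cast<std::uint64_t>(head_dim);
    // Factor 2 covers the separate K and V buffers.
    return 2ULL * static_cast<std::uint64_t>(num_layers) * positions * per_position *
           static_cast<std::uint64_t>(bytes_per_element);
}
```

With illustrative values of 22 layers, 2048 positions, 4 KV heads, and a head dimension of 64, the estimate is 92,274,688 bytes (88 MiB) at 4 bytes per element, and it scales linearly with `max_batch_size`.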

◆ initialize_batch()

| void KVCache::initialize_batch (int batch_size) | inline |

Initialize batch mode with specified number of sequences.

- Parameters

| Parameter | Description |
| --- | --- |
| batch_size | Number of sequences to process in batch. |

Definition at line 201 of file model.h.

References batch_seq_lens, current_batch_size, max_batch_size, and Logger::warning().

Referenced by tinyllama::TinyLlamaSession::generate_batch().
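The reference list above (batch_seq_lens, current_batch_size, max_batch_size, and Logger::warning()) suggests that the function validates the requested size against the configured maximum. The sketch below shows one plausible reading, in which an oversized request is clamped with a warning; the real function may handle that case differently. `SketchBatchCache` is a hypothetical stand-in, and `std::cerr` replaces Logger::warning() so the example is self-contained.

```cpp
#include <cassert>
#include <cstddef>
#include <iostream>
#include <vector>

// Hypothetical stand-in with the three members initialize_batch() references.
struct SketchBatchCache {
    std::vector<int> batch_seq_lens;
    int max_batch_size = 1;
    int current_batch_size = 0;

    // Assumed behaviour: clamp oversized requests, then start every
    // sequence in the batch at length 0.
    void initialize_batch(int batch_size) {
        assert(batch_size >= 0);
        if (batch_size > max_batch_size) {
            std::cerr << "warning: requested batch size " << batch_size
                      << " exceeds max_batch_size " << max_batch_size
                      << "; clamping\n";
            batch_size = max_batch_size;
        }
        current_batch_size = batch_size;
        batch_seq_lens.assign(static_cast<std::size_t>(batch_size), 0);
    }
};
```

Under this reading, calling initialize_batch(3) on a cache whose max_batch_size is 4 leaves three zeroed entries in batch_seq_lens, while initialize_batch(8) would be reduced to 4.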

Member Data Documentation

◆ batch_seq_lens

| std::vector<int> KVCache::batch_seq_lens |

Sequence lengths for each sequence in batch

Definition at line 158 of file model.h.

Referenced by clear_data(), TinyLlamaModel::forward_cpu_batch_generation(), initialize(), and initialize_batch().

◆ current_batch_size

| int KVCache::current_batch_size = 0 |

Current number of active sequences

Definition at line 160 of file model.h.

Referenced by clear_data(), TinyLlamaModel::forward_cpu_batch_generation(), initialize(), and initialize_batch().

◆ layers

| std::vector<KVCacheLayer> KVCache::layers |

KV cache for each layer

Definition at line 152 of file model.h.

Referenced by clear_data(), TinyLlamaModel::forward(), CPUBatchProcessor::forward_cpu_batch(), TinyLlamaModel::forward_cpu_batch_generation(), initialize(), update_kv_cache_batch_cpu(), and update_kv_cache_batch_cpu_sequence_aware().

◆ max_batch_size

| int KVCache::max_batch_size = 1 |

Maximum number of sequences that can be cached

Definition at line 159 of file model.h.

Referenced by initialize(), initialize_batch(), update_kv_cache_batch_cpu(), and update_kv_cache_batch_cpu_sequence_aware().

◆ max_seq_len_config_

| int KVCache::max_seq_len_config_ = 0 |

Stores the original max_seq_len value

Definition at line 163 of file model.h.

Referenced by TinyLlamaModel::forward(), CPUBatchProcessor::forward_cpu_batch(), TinyLlamaModel::forward_cpu_batch_generation(), initialize(), update_kv_cache_batch_cpu(), and update_kv_cache_batch_cpu_sequence_aware().

◆ seq_len

| int KVCache::seq_len = 0 |

Current sequence length (single-sequence mode)

Definition at line 155 of file model.h.

Referenced by clear_data(), CPUBatchProcessor::forward_cpu_batch(), TinyLlamaModel::forward_cpu_batch_generation(), tinyllama::TinyLlamaSession::generate(), tinyllama::TinyLlamaSession::generate_batch(), and initialize().

◆ total_model_layers_

| int KVCache::total_model_layers_ = 0 |

Total number of layers in the model

Definition at line 162 of file model.h.

Referenced by initialize().

The documentation for this struct was generated from the following files: