#include "tokenizer.h"
#include <algorithm>
#include <cctype>
#include <fstream>
#include <iomanip>
#include <iostream>
#include <map>
#include <nlohmann/json.hpp>
#include <queue>
#include <boost/regex.hpp>
#include <boost/xpressive/xpressive.hpp>
#include <sstream>
#include <stdexcept>
#include <unordered_set>
#include <vector>
#include <string>
#include <limits>
#include <utility>
#include <functional>
#include <filesystem>
#include "logger.h"

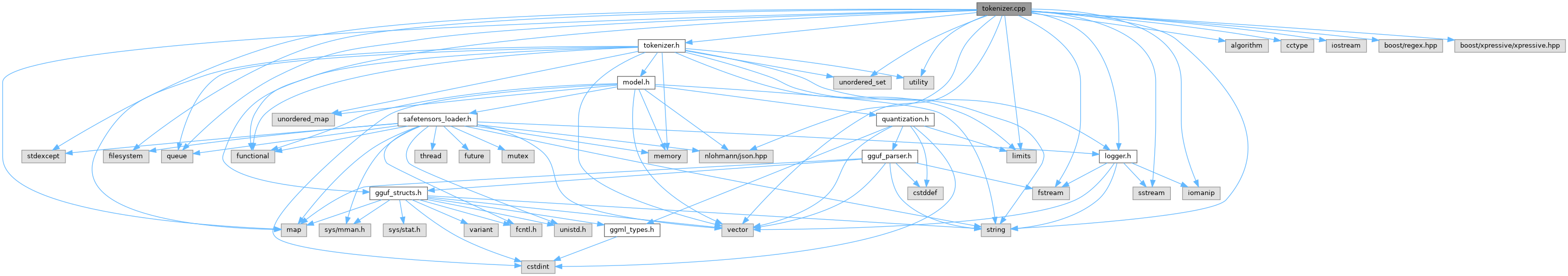

Include dependency graph for tokenizer.cpp:


Typedefs

| using | json = nlohmann::json |

Functions

| bool | is_numeric (const std::string &s) |

| static std::unordered_map< std::string, int > | generate_bpe_merges_from_vocab_scores (const std::vector< std::string > &id_to_token, const std::vector< float > &token_scores) |

| static std::string | replace_all (std::string str, const std::string &from, const std::string &to) |

| static std::vector< std::pair< std::string, int > > | sort_tokens_by_length_desc (const std::unordered_map< std::string, int > &tokens_map) |

Variables

| const std::string | BPE_SPACE_CHAR = "\xC4\xA0" |

Typedef Documentation

◆ json

| using json = nlohmann::json |

Definition at line 28 of file tokenizer.cpp.

Function Documentation

◆ generate_bpe_merges_from_vocab_scores()

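| static std::unordered_map< std::string, int > generate_bpe_merges_from_vocab_scores (const std::vector< std::string > &id_to_token, const std::vector< float > &token_scores) |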

Definition at line 422 of file tokenizer.cpp.

    424 {
    425
    426     std::unordered_map<std::string, int> generated_merges;
    427
    428     if (token_scores.empty() || id_to_token.empty()) {
    430         return generated_merges;
    431     }
    432
    434
    435     // Create a list of tokens with their scores, sorted by score (higher score = higher priority)
    436     std::vector<std::pair<float, std::string>> scored_tokens;
    437     for (size_t id = 0; id < id_to_token.size(); ++id) {
    438         if (id < token_scores.size()) {
    439             const std::string& token = id_to_token[id];
    440             // Skip special tokens and single characters
    441             if (token.length() > 1 &&
    442                 token.find("<") == std::string::npos &&
    443                 token.find(">") == std::string::npos &&
    444                 token != "▁") { // Skip SentencePiece space token
    445                 scored_tokens.emplace_back(token_scores[id], token);
    446             }
    447         }
    448     }
    449
    450     // Sort by score (descending - higher scores first)
    451     std::sort(scored_tokens.begin(), scored_tokens.end(),
    452               [](const auto& a, const auto& b) { return a.first > b.first; });
    453
    454     Logger::info("Found " + std::to_string(scored_tokens.size()) + " candidate tokens for merge generation");
    455
    456     // Generate merges by finding tokens that can be decomposed into pairs
    457     int merge_rank = 0;
    458     std::unordered_set<std::string> processed_tokens;
    459
    460     for (const auto& [score, token] : scored_tokens) {
    461         if (processed_tokens.count(token)) continue;
    462
    463         // Try to find the best split point for this token
    464         std::string best_left, best_right;
    465         float best_combined_score = -std::numeric_limits<float>::infinity();
    466
    467         // Try all possible split points
    468         for (size_t split = 1; split < token.length(); ++split) {
    469             std::string left = token.substr(0, split);
    470             std::string right = token.substr(split);
    471
    472             // Check if both parts exist in vocabulary
    473             auto left_it = std::find(id_to_token.begin(), id_to_token.end(), left);
    474             auto right_it = std::find(id_to_token.begin(), id_to_token.end(), right);
    475
    476             if (left_it != id_to_token.end() && right_it != id_to_token.end()) {
    477                 // Both parts exist, calculate combined score
    478                 size_t left_id = std::distance(id_to_token.begin(), left_it);
    479                 size_t right_id = std::distance(id_to_token.begin(), right_it);
    480                 float left_score = (left_id < token_scores.size()) ?
    481                     token_scores[left_id] : 0.0f;
    482                 float right_score = (right_id < token_scores.size()) ?
    483                     token_scores[right_id] : 0.0f;
    484                 float combined_score = left_score + right_score;
    485
    486                 if (combined_score > best_combined_score) {
    487                     best_combined_score = combined_score;
    488                     best_left = left;
    489                     best_right = right;
    490                 }
    491             }
    492         }
    493
    494         // If we found a valid decomposition, add it as a merge rule
    495         if (!best_left.empty() && !best_right.empty()) {
    496             std::string merge_key = best_left + best_right;
    497             if (generated_merges.find(merge_key) == generated_merges.end()) {
    498                 generated_merges[merge_key] = merge_rank++;
    499                 Logger::debug("Generated merge: '" + best_left + "' + '" + best_right + "' -> '" + token + "' (rank " + std::to_string(merge_rank-1) + ")");
    500             }
    501         }
    502
    503         processed_tokens.insert(token);
    504
    505         // Limit the number of merges to prevent excessive computation
    506         if (merge_rank >= 50000) {
    508             break;
    509         }
    510     }
    511
    512     Logger::info("Generated " + std::to_string(generated_merges.size()) + " BPE merge rules from vocabulary and scores");
    513     return generated_merges;
    514 }

References Logger::debug(), Logger::info(), and Logger::warning().

Referenced by Tokenizer::Tokenizer().
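
The split search in the listing above calls `std::find` on `id_to_token` twice per split point, so every candidate token costs a linear scan of the vocabulary for each split. The following is a minimal sketch of one way to do the same lookup through a hash index built once up front. The helper names `build_token_index` and `best_split` are hypothetical and do not appear in tokenizer.cpp; the scoring rule (sum of the two part scores, 0.0 for ids without a score, first best split wins on ties) is copied from the listing.

```cpp
#include <cstddef>
#include <limits>
#include <string>
#include <unordered_map>
#include <utility>
#include <vector>

// Hypothetical helper: map each token string to its id once, so split
// candidates can be checked in O(1) average time instead of a linear scan.
// If a token string occurs more than once, the lowest id is kept, which is
// the id std::find would have located.
std::unordered_map<std::string, std::size_t> build_token_index(
    const std::vector<std::string>& id_to_token) {
    std::unordered_map<std::string, std::size_t> index;
    index.reserve(id_to_token.size());
    for (std::size_t id = 0; id < id_to_token.size(); ++id) {
        index.emplace(id_to_token[id], id);  // emplace never overwrites
    }
    return index;
}

// Hypothetical helper: return the (left, right) split of `token` whose parts
// are both in the vocabulary and whose summed score is highest. Returns a
// pair of empty strings when no such split exists.
std::pair<std::string, std::string> best_split(
    const std::string& token,
    const std::unordered_map<std::string, std::size_t>& index,
    const std::vector<float>& token_scores) {
    std::pair<std::string, std::string> best;
    float best_score = -std::numeric_limits<float>::infinity();
    for (std::size_t split = 1; split < token.length(); ++split) {
        std::string left = token.substr(0, split);
        std::string right = token.substr(split);
        auto l = index.find(left);
        auto r = index.find(right);
        if (l == index.end() || r == index.end()) continue;
        // Ids beyond the score table contribute 0.0, as in the listing.
        float ls = l->second < token_scores.size() ? token_scores[l->second] : 0.0f;
        float rs = r->second < token_scores.size() ? token_scores[r->second] : 0.0f;
        if (ls + rs > best_score) {
            best_score = ls + rs;
            best = {std::move(left), std::move(right)};
        }
    }
    return best;
}
```

Like the original, this splits on byte offsets, so a split point can fall inside a multi-byte UTF-8 character; such halves simply fail the vocabulary lookup unless the vocabulary contains them.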

◆ is_numeric()

| bool is_numeric | ( | const std::string & | s | ) |

Definition at line 36 of file tokenizer.cpp.

    36 {
    37     if (s.empty()) {
    38         return false; // An empty string is not considered numeric
    39     }
    40     for (char c : s) {
    41         if (!std::isdigit(static_cast<unsigned char>(c))) {
    42             return false; // Found a non-digit character
    43         }
    44     }
    45     return true; // All characters are digits
    46 }
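
As a small illustration of what the check above accepts, the sketch below reproduces the function body from the listing so that it compiles on its own. It tests each character with `std::isdigit`, so signs, decimal points, and whitespace all make the result `false`.

```cpp
#include <cctype>
#include <string>

// Reproduced from the listing above: true only for a non-empty string made
// up entirely of decimal digit characters.
bool is_numeric(const std::string& s) {
    if (s.empty()) {
        return false;
    }
    for (char c : s) {
        // The cast avoids undefined behavior for negative char values.
        if (!std::isdigit(static_cast<unsigned char>(c))) {
            return false;
        }
    }
    return true;
}
```

For example, `is_numeric("123")` is true, while `is_numeric("-5")`, `is_numeric("3.14")`, `is_numeric(" 7")`, and `is_numeric("")` are all false.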

◆ replace_all()

| static std::string replace_all (std::string str, const std::string &from, const std::string &to) |

Definition at line 1619 of file tokenizer.cpp.

    1619 {
    1620     size_t start_pos = 0;
    1621     while((start_pos = str.find(from, start_pos)) != std::string::npos) {
    1622         str.replace(start_pos, from.length(), to);
    1623         start_pos += to.length(); // Skip the inserted text so a 'to' that contains 'from' is not matched again
    1624     }
    1625     return str;
    1626 }

Referenced by Tokenizer::apply_chat_template().
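
One edge case in the loop above is an empty `from`: `std::string::find` then matches at every position, so the loop never terminates. The sketch below shows the same algorithm with an early return for that case. The name `replace_all_safe` is hypothetical, and whether the callers in tokenizer.cpp can ever pass an empty pattern is not something this page shows.

```cpp
#include <cstddef>
#include <string>

// Hypothetical variant of replace_all: identical replacement loop, plus a
// guard that returns the input unchanged when the search pattern is empty.
std::string replace_all_safe(std::string str, const std::string& from,
                             const std::string& to) {
    if (from.empty()) {
        return str;  // find("") matches everywhere; nothing sensible to replace
    }
    std::size_t start_pos = 0;
    while ((start_pos = str.find(from, start_pos)) != std::string::npos) {
        str.replace(start_pos, from.length(), to);
        // Continue after the inserted text so it is never rescanned.
        start_pos += to.length();
    }
    return str;
}
```

Because the search resumes after the inserted text, replacing `"x"` with `"yx"` in `"xx"` yields `"yxyx"` rather than looping on the newly inserted `x`.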

◆ sort_tokens_by_length_desc()

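| static std::vector< std::pair< std::string, int > > sort_tokens_by_length_desc (const std::unordered_map< std::string, int > &tokens_map) |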

Definition at line 2080 of file tokenizer.cpp.

    2080 {
    2081     std::vector<std::pair<std::string, int>> sorted_tokens;
    2082     for (const auto& pair : tokens_map) {
    2083         sorted_tokens.push_back(pair);
    2084     }
    2085     std::sort(sorted_tokens.begin(), sorted_tokens.end(),
    2086               [](const auto& a, const auto& b) {
    2087                   return a.first.length() > b.first.length();
    2088               });
    2089     return sorted_tokens;
    2090 }

Referenced by Tokenizer::bpe_tokenize_to_ids().
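
The comparator above orders tokens by length only. Since `std::sort` is not stable and `std::unordered_map` iterates in an unspecified order, tokens of equal length can come out in a different order from one run or platform to the next. If a reproducible order matters to the caller, one option is a lexicographic tie-break, sketched below. The name `sort_tokens_by_length_desc_stable` is hypothetical, and this page does not show whether `Tokenizer::bpe_tokenize_to_ids()` depends on the order of equal-length tokens.

```cpp
#include <algorithm>
#include <string>
#include <unordered_map>
#include <utility>
#include <vector>

// Hypothetical variant: longest tokens first, ties broken by ascending
// lexicographic order so the result does not depend on hash-map iteration.
std::vector<std::pair<std::string, int>> sort_tokens_by_length_desc_stable(
    const std::unordered_map<std::string, int>& tokens_map) {
    // Copy all (token, id) pairs out of the map in one step.
    std::vector<std::pair<std::string, int>> sorted_tokens(tokens_map.begin(),
                                                           tokens_map.end());
    std::sort(sorted_tokens.begin(), sorted_tokens.end(),
              [](const auto& a, const auto& b) {
                  if (a.first.length() != b.first.length()) {
                      return a.first.length() > b.first.length();
                  }
                  return a.first < b.first;  // deterministic tie-break
              });
    return sorted_tokens;
}
```

Map keys are unique, so the tie-break gives a total order and the output is fully determined by the map contents.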

Variable Documentation

◆ BPE_SPACE_CHAR

| const std::string BPE_SPACE_CHAR = "\xC4\xA0" |

Definition at line 26 of file tokenizer.cpp.

Referenced by Tokenizer::add_bigram_to_queue_refactored(), Tokenizer::bpe_tokenize_to_ids(), Tokenizer::decode(), and Tokenizer::Tokenizer().
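
The two bytes `0xC4 0xA0` are the UTF-8 encoding of U+0120 (`Ġ`), the character that GPT-2 style byte-level BPE vocabularies use in place of a leading space. As a rough sketch of how such a marker is typically applied, the helpers below map ASCII spaces to the marker and back. The names `kSpaceMarker`, `spaces_to_marker`, and `marker_to_spaces` are hypothetical; the actual handling inside the `Tokenizer` methods listed above is not shown on this page.

```cpp
#include <cstddef>
#include <string>

// Same bytes as BPE_SPACE_CHAR: the UTF-8 encoding of U+0120.
const std::string kSpaceMarker = "\xC4\xA0";

// Hypothetical helper: replace every ASCII space with the two-byte marker.
std::string spaces_to_marker(const std::string& text) {
    std::string out;
    out.reserve(text.size());
    for (char c : text) {
        if (c == ' ') {
            out += kSpaceMarker;
        } else {
            out += c;
        }
    }
    return out;
}

// Hypothetical helper: inverse mapping, turning each marker back into a space.
std::string marker_to_spaces(const std::string& text) {
    std::string out;
    out.reserve(text.size());
    std::size_t pos = 0;
    while (pos < text.size()) {
        // compare() clips the range at the end of the string, so a lone
        // trailing 0xC4 byte is copied through unchanged.
        if (text.compare(pos, kSpaceMarker.size(), kSpaceMarker) == 0) {
            out += ' ';
            pos += kSpaceMarker.size();
        } else {
            out += text[pos++];
        }
    }
    return out;
}
```

When writing the marker next to hex-digit letters in a literal, split the string (`"a\xC4\xA0" "b"`), since a hex escape otherwise consumes the following hex digits.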