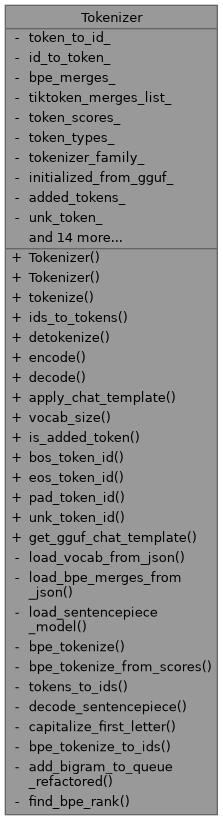

A lightweight tokenizer implementation for text processing.

#include <tokenizer.h>

Public Types

| Type | Name | Description |
|---|---|---|
| enum | PreTokenizeMethod { DEFAULT, LLAMA_REGEX } | Enumeration of available pre-tokenization methods. |
| enum class | Type { UNKNOWN, SENTENCEPIECE_BPE, TIKTOKEN_BPE } | |

Public Member Functions

| Return type | Member | Description |
|---|---|---|
| | Tokenizer (const std::string &vocab_path, const std::string &model_path, const ModelConfig &config) | Constructs a tokenizer from vocabulary and model files (for Llama 2-style JSON). |
| | Tokenizer (const GGUFData &gguf_data, const ModelConfig &config) | Constructs a tokenizer from GGUF format data. |
| std::vector< std::string > | tokenize (const std::string &text) const | Tokenizes input text into token strings. |
| std::vector< std::string > | ids_to_tokens (const std::vector< int > &ids) const | Converts token IDs back to token strings. |
| std::string | detokenize (const std::vector< std::string > &tokens) const | Combines tokens back into text. |
| std::vector< int > | encode (const std::string &text, bool add_bos=true, bool add_eos=false, PreTokenizeMethod pre_tok_override=PreTokenizeMethod::DEFAULT) const | Encodes text into token IDs with optional special tokens. |
| std::string | decode (const std::vector< int > &ids, bool skip_special_tokens=true) const | Decodes token IDs back to text. |
| std::string | apply_chat_template (const std::string &user_prompt, const std::string &system_message, const ModelConfig &config) const | Applies chat template formatting to the input prompt. |
| int | vocab_size () const | Returns the size of the vocabulary. |
| bool | is_added_token (int id) const | Checks if a token ID represents an added token. |
| int | bos_token_id () const | Gets the beginning-of-sequence token ID. |
| int | eos_token_id () const | Gets the end-of-sequence token ID. |
| int | pad_token_id () const | Gets the padding token ID. |
| int | unk_token_id () const | Gets the unknown token ID. |
| const std::string & | get_gguf_chat_template () const | |

Private Member Functions

| Return type | Member | Description |
|---|---|---|
| void | load_vocab_from_json (const std::string &vocab_path, std::unordered_map< std::string, int > &token_to_id, std::vector< std::string > &id_to_token) | Loads vocabulary from JSON file. |
| void | load_bpe_merges_from_json (const std::string &model_path) | Loads BPE merge rules from JSON file. |
| void | load_sentencepiece_model (const std::string &model_path) | Loads a SentencePiece model. |
| std::vector< std::string > | bpe_tokenize (const std::string &text) const | |
| std::vector< std::string > | bpe_tokenize_from_scores (const std::string &text) const | |
| std::vector< int > | tokens_to_ids (const std::vector< std::string > &tokens) const | |
| std::string | decode_sentencepiece (const std::vector< int > &ids, bool skip_special_tokens) const | |
| std::string | capitalize_first_letter (std::string s) const | |
| std::vector< int > | bpe_tokenize_to_ids (const std::string &text, bool add_bos_token_param, bool add_eos_token_param, bool ignore_merges_param) const | |
| void | add_bigram_to_queue_refactored (const char *text_data_base, const std::vector< llm_symbol > &symbols, llm_symbol::index first_symbol_idx, std::priority_queue< std::pair< int, int >, std::vector< std::pair< int, int > >, std::greater< std::pair< int, int > > > &work_queue) const | |
| int | find_bpe_rank (const std::string &token_left, const std::string &token_right) const | |

Private Attributes

| Type | Member |
|---|---|
| std::unordered_map< std::string, int > | token_to_id_ |
| std::vector< std::string > | id_to_token_ |
| std::unordered_map< std::string, int > | bpe_merges_ |
| std::vector< std::string > | tiktoken_merges_list_ |
| std::vector< float > | token_scores_ |
| std::vector< int32_t > | token_types_ |
| ModelConfig::TokenizerFamily | tokenizer_family_ = ModelConfig::TokenizerFamily::UNKNOWN |
| bool | initialized_from_gguf_ = false |
| std::unordered_map< std::string, int > | added_tokens_ |
| std::string | unk_token_ |
| std::string | bos_token_ |
| std::string | eos_token_ |
| std::string | pad_token_ |
| int | unk_token_id_ = 0 |
| int | bos_token_id_ = -1 |
| int | eos_token_id_ = -1 |
| int | pad_token_id_ = -1 |
| bool | sentencepiece_model_loaded_ = false |
| std::string | pre_tok_type_ = "unknown" |
| std::unordered_map< int, std::string > | id_to_added_token_ |
| std::unordered_set< std::string > | chat_template_special_tokens |
| std::unordered_map< char, int > | byte_char_to_id_ |
| Type | type_ = Type::UNKNOWN |
| std::string | gguf_chat_template_ |

Detailed Description

A lightweight tokenizer implementation for text processing.

This class provides basic text tokenization without external dependencies. It supports several tokenization methods, including space-based, BPE (Byte-Pair Encoding), and regex-based tokenization. A tokenizer can be constructed either from JSON vocabulary and model files or from GGUF format data.

Definition at line 61 of file tokenizer.h.

Member Enumeration Documentation

◆ PreTokenizeMethod

Enumeration of available pre-tokenization methods.

| Enumerator | Description |
|---|---|
| DEFAULT | Use the default pre-tokenization method selected during initialization. |
| LLAMA_REGEX | LLaMA-style regex-based pre-tokenization. |

Definition at line 66 of file tokenizer.h.
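LLAMA_REGEX splits text with a regular expression before BPE merging runs. The sketch below is a simplified, ASCII-only approximation of that kind of pattern using std::regex. The function name pretokenize_ascii is hypothetical, and the real LLaMA patterns use Unicode property classes (\p{L}, \p{N}) and case-insensitive contractions that std::regex does not support, so this is not the library's actual pattern.

```cpp
#include <cassert>
#include <regex>
#include <string>
#include <vector>

// Hypothetical, ASCII-only approximation of a LLaMA/GPT-style pre-tokenizer regex.
// Real patterns use \p{L} and \p{N}; std::regex lacks them, so [A-Za-z] and [0-9] stand in.
std::vector<std::string> pretokenize_ascii(const std::string& text) {
    static const std::regex pattern(
        "'s|'t|'re|'ve|'m|'ll|'d"  // common English contractions (lowercase only here)
        "| ?[A-Za-z]+"             // words, optionally with one leading space
        "| ?[0-9]{1,3}"            // numbers in chunks of up to three digits
        "| ?[^\\sA-Za-z0-9]+"      // runs of punctuation or symbols
        "|\\s+(?!\\S)"             // whitespace not followed by a non-space character
        "|\\s+");                  // any remaining whitespace
    std::vector<std::string> pieces;
    for (std::sregex_iterator it(text.begin(), text.end(), pattern), end; it != end; ++it) {
        pieces.push_back(it->str());
    }
    return pieces;
}
```

For example, "Hello world's 123" splits into "Hello", " world", "'s" and " 123". Keeping a single leading space attached to each word lets BPE learn separate tokens for word-initial pieces.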

◆ Type

enum class Tokenizer::Type [strong]

| Enumerator |
|---|
| UNKNOWN |
| SENTENCEPIECE_BPE |
| TIKTOKEN_BPE |

Definition at line 71 of file tokenizer.h.
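How the Type value is chosen is not documented here. One plausible source when loading GGUF data is the standard tokenizer.ggml.model metadata key, where "llama" marks a SentencePiece vocabulary and "gpt2" a byte-level BPE vocabulary (the value Llama 3 GGUF files use). The mapping below is an assumption for illustration, not code from tokenizer.cpp; TokenizerType and type_from_ggml_model are hypothetical stand-ins.

```cpp
#include <cassert>
#include <string>

// Hypothetical local copy of Tokenizer::Type so the example is self-contained.
enum class TokenizerType { UNKNOWN, SENTENCEPIECE_BPE, TIKTOKEN_BPE };

// Assumed mapping from the GGUF "tokenizer.ggml.model" value to a tokenizer type.
TokenizerType type_from_ggml_model(const std::string& model) {
    if (model == "llama") return TokenizerType::SENTENCEPIECE_BPE;  // SentencePiece vocabulary
    if (model == "gpt2") return TokenizerType::TIKTOKEN_BPE;        // byte-level BPE vocabulary
    return TokenizerType::UNKNOWN;                                  // anything else is unsupported
}
```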

Constructor & Destructor Documentation

◆ Tokenizer() [1/2]

Tokenizer::Tokenizer(const std::string &vocab_path, const std::string &model_path, const ModelConfig &config)

Constructs a tokenizer from vocabulary and model files (for Llama 2-style JSON).

- Parameters
  - vocab_path: Path to the JSON vocabulary file.
  - model_path: Path to the JSON model file containing BPE merges (optional).
  - config: The model configuration (used to get special token IDs if they are not in the vocabulary file).

Definition at line 285 of file tokenizer.cpp.

References added_tokens_, bos_token_, ModelConfig::bos_token_id, bos_token_id_, Logger::debug(), eos_token_, ModelConfig::eos_token_id, eos_token_id_, Logger::error(), id_to_added_token_, id_to_token_, Logger::info(), ModelConfig::LLAMA3_TIKTOKEN, ModelConfig::LLAMA_SENTENCEPIECE, load_bpe_merges_from_json(), load_sentencepiece_model(), load_vocab_from_json(), pad_token_, pad_token_id_, token_to_id_, tokenizer_family_, unk_token_, unk_token_id_, vocab_size(), and Logger::warning().

◆ Tokenizer() [2/2]

Tokenizer::Tokenizer(const GGUFData &gguf_data, const ModelConfig &config) [explicit]

Constructs a tokenizer from GGUF format data.

- Parameters
  - gguf_data: The GGUF data containing tokenizer information.
  - config: The model configuration (contains the tokenizer family, special token IDs, etc.).

Definition at line 516 of file tokenizer.cpp.

References added_tokens_, bos_token_, ModelConfig::bos_token_id, bos_token_id_, bpe_merges_, BPE_SPACE_CHAR, byte_char_to_id_, Logger::debug(), eos_token_, ModelConfig::eos_token_id, eos_token_id_, Logger::error(), generate_bpe_merges_from_vocab_scores(), gguf_chat_template_, id_to_added_token_, id_to_token_, Logger::info(), ModelConfig::LLAMA3_TIKTOKEN, ModelConfig::LLAMA_SENTENCEPIECE, GGUFData::metadata, pad_token_, ModelConfig::pad_token_id, pad_token_id_, SENTENCEPIECE_BPE, TIKTOKEN_BPE, token_scores_, token_to_id_, token_types_, tokenizer_family_, GGUFData::tokenizer_merges, GGUFData::tokenizer_scores, GGUFData::tokenizer_token_types, GGUFData::tokenizer_tokens, type_, unk_token_, ModelConfig::unk_token_id, unk_token_id_, UNKNOWN, and Logger::warning().
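The GGUF constructor references generate_bpe_merges_from_vocab_scores(), which suggests merge rules can be derived from vocabulary scores when a file carries scores but no explicit merges. A minimal sketch of that idea, assuming merges are keyed as "left right" and ranked by the score of the merged token; merges_from_scores and these conventions are hypothetical, not the helper's documented behavior.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <string>
#include <tuple>
#include <unordered_map>
#include <unordered_set>
#include <vector>

// Candidate merge: (score of the merged token, left piece, right piece).
using Candidate = std::tuple<float, std::string, std::string>;

// Hypothetical: derive "left right" -> rank rules from a scored vocabulary.
// Each byte-wise split of a token into two in-vocabulary halves is a candidate;
// a higher score of the merged token gives a lower rank (an earlier merge).
std::unordered_map<std::string, int> merges_from_scores(const std::vector<std::string>& tokens,
                                                        const std::vector<float>& scores) {
    const std::unordered_set<std::string> vocab(tokens.begin(), tokens.end());
    std::vector<Candidate> candidates;
    for (std::size_t i = 0; i < tokens.size() && i < scores.size(); ++i) {
        const std::string& tok = tokens[i];
        for (std::size_t cut = 1; cut < tok.size(); ++cut) {
            const std::string left = tok.substr(0, cut);
            const std::string right = tok.substr(cut);
            if (vocab.count(left) && vocab.count(right)) candidates.emplace_back(scores[i], left, right);
        }
    }
    // Highest score first; stable_sort keeps vocabulary order among equal scores.
    std::stable_sort(candidates.begin(), candidates.end(),
                     [](const Candidate& a, const Candidate& b) { return std::get<0>(a) > std::get<0>(b); });
    std::unordered_map<std::string, int> ranks;
    for (const Candidate& c : candidates) {
        const std::string key = std::get<1>(c) + " " + std::get<2>(c);
        if (ranks.count(key) == 0) {
            const int rank = static_cast<int>(ranks.size());
            ranks[key] = rank;  // first (best-scoring) occurrence wins
        }
    }
    return ranks;
}
```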

Member Function Documentation

◆ add_bigram_to_queue_refactored()

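void Tokenizer::add_bigram_to_queue_refactored(const char *text_data_base, const std::vector< llm_symbol > &symbols, llm_symbol::index first_symbol_idx, std::priority_queue< std::pair< int, int >, std::vector< std::pair< int, int > >, std::greater< std::pair< int, int > > > &work_queue) const [private]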

Definition at line 2363 of file tokenizer.cpp.

References bpe_merges_, BPE_SPACE_CHAR, Logger::debug(), Logger::error(), llm_symbol::n, llm_symbol::next, and llm_symbol::text.

Referenced by bpe_tokenize_to_ids().
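add_bigram_to_queue_refactored() takes a min-heap of (int, int) pairs, which matches the usual rank-ordered BPE loop: queue every adjacent pair that is a known merge as (rank, left index), pop the lowest rank, merge, then queue the new neighbouring pairs. The sketch below shows that loop over a doubly linked list of symbols. Sym, queue_bigram, bpe_merge and the "left right" key format are hypothetical, not the class's actual internals.

```cpp
#include <cassert>
#include <functional>
#include <queue>
#include <string>
#include <unordered_map>
#include <utility>
#include <vector>

// Simplified stand-in for llm_symbol: a piece of text linked to its live neighbours.
struct Sym {
    int prev;          // previous live symbol, -1 if none
    int next;          // next live symbol, -1 if none
    std::string text;  // emptied once merged into its left neighbour
};

// Min-heap of (merge rank, left symbol index), the same shape as work_queue.
using WorkQueue = std::priority_queue<std::pair<int, int>, std::vector<std::pair<int, int>>,
                                      std::greater<std::pair<int, int>>>;

// Queue the bigram starting at `left` if "left right" is a known merge.
void queue_bigram(const std::vector<Sym>& syms, int left,
                  const std::unordered_map<std::string, int>& ranks, WorkQueue& q) {
    if (left < 0 || syms[left].next < 0) return;
    auto it = ranks.find(syms[left].text + " " + syms[syms[left].next].text);
    if (it != ranks.end()) q.push({it->second, left});
}

// Apply rank-ordered merges to one pre-tokenized word (byte-wise, for brevity).
std::vector<std::string> bpe_merge(const std::string& word,
                                   const std::unordered_map<std::string, int>& ranks) {
    const int n = static_cast<int>(word.size());
    std::vector<Sym> syms;
    for (int i = 0; i < n; ++i) syms.push_back({i - 1, i + 1 < n ? i + 1 : -1, std::string(1, word[i])});
    WorkQueue q;
    for (int i = 0; i < n; ++i) queue_bigram(syms, i, ranks, q);

    while (!q.empty()) {
        const std::pair<int, int> top = q.top();
        q.pop();
        const int left = top.second;
        const int right = syms[left].next;
        if (syms[left].text.empty() || right < 0) continue;  // stale: symbol already consumed
        auto it = ranks.find(syms[left].text + " " + syms[right].text);
        if (it == ranks.end() || it->second != top.first) continue;  // stale: neighbours changed
        syms[left].text += syms[right].text;  // merge right into left
        syms[right].text.clear();
        syms[left].next = syms[right].next;
        if (syms[right].next >= 0) syms[syms[right].next].prev = left;
        queue_bigram(syms, syms[left].prev, ranks, q);  // new pair on the left
        queue_bigram(syms, left, ranks, q);             // new pair on the right
    }
    std::vector<std::string> out;
    for (int i = n > 0 ? 0 : -1; i >= 0; i = syms[i].next) out.push_back(syms[i].text);
    return out;
}
```

With ranks {"l o": 0, "lo w": 1, "e r": 2}, bpe_merge("lower", ranks) yields "low" and "er". Skipping stale heap entries on pop, instead of deleting them when a merge invalidates them, keeps each merge step at O(log n).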

◆ apply_chat_template()

std::string Tokenizer::apply_chat_template(const std::string &user_prompt, const std::string &system_message, const ModelConfig &config) const

Applies chat template formatting to the input prompt.

- Parameters
  - user_prompt: The user's input text.
  - system_message: The system message to prepend.
  - config: Model configuration containing template information.

- Returns

- Formatted chat text

Definition at line 1628 of file tokenizer.cpp.

References added_tokens_, bos_token_, bos_token_id_, Logger::debug(), eos_token_, eos_token_id_, gguf_chat_template_, Logger::info(), replace_all(), token_to_id_, and Logger::warning().
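apply_chat_template() references replace_all(), which points to placeholder substitution into a template string. As an illustration only, the sketch below fills the commonly documented Llama 2 chat layout. The {system}/{user} placeholders, replace_all_copy and llama2_chat_prompt are hypothetical, and the BOS token is assumed to be added separately by encode().

```cpp
#include <cassert>
#include <string>

// Replace every occurrence of `from` in `s` with `to` (hypothetical helper in the
// spirit of replace_all(); its real signature is not documented here).
std::string replace_all_copy(std::string s, const std::string& from, const std::string& to) {
    if (from.empty()) return s;
    for (std::string::size_type pos = s.find(from); pos != std::string::npos;
         pos = s.find(from, pos + to.size())) {  // skip past the inserted text
        s.replace(pos, from.size(), to);
    }
    return s;
}

// Llama 2 chat layout with hypothetical placeholders; placeholder text inside the
// substituted messages is not escaped.
std::string llama2_chat_prompt(const std::string& user_prompt, const std::string& system_message) {
    std::string tmpl = "[INST] <<SYS>>\n{system}\n<</SYS>>\n\n{user} [/INST]";
    tmpl = replace_all_copy(tmpl, "{system}", system_message);
    return replace_all_copy(tmpl, "{user}", user_prompt);
}
```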

Referenced by main().

◆ bos_token_id()

int Tokenizer::bos_token_id() const [inline]

Gets the beginning-of-sequence token ID.

- Returns

- BOS token ID

Definition at line 163 of file tokenizer.h.

References bos_token_id_.

◆ bpe_tokenize()

std::vector< std::string > Tokenizer::bpe_tokenize(const std::string &text) const [private]

Definition at line 1975 of file tokenizer.cpp.

References bpe_merges_, Logger::debug(), and Logger::warning().

Referenced by encode().

◆ bpe_tokenize_from_scores()

std::vector< std::string > Tokenizer::bpe_tokenize_from_scores(const std::string &text) const [private]

Definition at line 59 of file tokenizer.cpp.

References bpe_merges_, Logger::debug(), and Logger::warning().

Referenced by encode().
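bpe_tokenize_from_scores() presumably merges by vocabulary score rather than by merge rank, as SentencePiece BPE does. A deliberately simple O(n^2) sketch of score-driven merging, assuming scores are looked up by the merged token's string; merge_by_scores is a hypothetical name.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <unordered_map>
#include <vector>

// Hypothetical score-driven merge loop: repeatedly merge the adjacent pair whose
// concatenation is in the vocabulary with the highest score (leftmost wins ties).
std::vector<std::string> merge_by_scores(const std::string& text,
                                         const std::unordered_map<std::string, float>& scores) {
    std::vector<std::string> pieces;
    for (char c : text) pieces.emplace_back(1, c);  // start from single bytes
    while (pieces.size() > 1) {
        int best = -1;
        float best_score = 0.0f;
        for (std::size_t i = 0; i + 1 < pieces.size(); ++i) {
            auto it = scores.find(pieces[i] + pieces[i + 1]);
            if (it != scores.end() && (best < 0 || it->second > best_score)) {
                best = static_cast<int>(i);
                best_score = it->second;
            }
        }
        if (best < 0) break;  // no adjacent pair forms a known token
        pieces[best] += pieces[best + 1];
        pieces.erase(pieces.begin() + best + 1);
    }
    return pieces;
}
```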

◆ bpe_tokenize_to_ids()

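std::vector< int > Tokenizer::bpe_tokenize_to_ids(const std::string &text, bool add_bos_token_param, bool add_eos_token_param, bool ignore_merges_param) const [private]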

Definition at line 2094 of file tokenizer.cpp.

References add_bigram_to_queue_refactored(), added_tokens_, bos_token_id_, BPE_SPACE_CHAR, byte_char_to_id_, Logger::debug(), eos_token_id_, Logger::error(), llm_symbol::n, sort_tokens_by_length_desc(), llm_symbol::text, token_to_id_, unk_token_id_, and Logger::warning().

Referenced by encode().
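bpe_tokenize_to_ids() references added_tokens_ and sort_tokens_by_length_desc(), which is consistent with splitting the input around added (special) tokens before BPE, trying longer tokens first. A sketch of that splitting step under those assumptions; Segment and split_on_added_tokens are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <string>
#include <unordered_map>
#include <vector>

struct Segment {
    std::string text;
    int added_id;  // ID of the matched added token, or -1 for ordinary text
};

// Hypothetical: split text around added tokens, trying longer ones first so that
// "abc" wins over its prefix "ab".
std::vector<Segment> split_on_added_tokens(const std::string& text,
                                           const std::unordered_map<std::string, int>& added) {
    std::vector<std::string> keys;
    for (const auto& kv : added) keys.push_back(kv.first);
    std::sort(keys.begin(), keys.end(),
              [](const std::string& a, const std::string& b) { return a.size() > b.size(); });
    std::vector<Segment> out;
    std::string plain;  // ordinary text accumulated since the last added token
    std::string::size_type i = 0;
    while (i < text.size()) {
        bool matched = false;
        for (const std::string& k : keys) {
            if (!k.empty() && text.compare(i, k.size(), k) == 0) {
                if (!plain.empty()) { out.push_back({plain, -1}); plain.clear(); }
                out.push_back({k, added.at(k)});
                i += k.size();
                matched = true;
                break;
            }
        }
        if (!matched) plain += text[i++];
    }
    if (!plain.empty()) out.push_back({plain, -1});
    return out;
}
```

Only the ordinary-text segments would then go through BPE; added-token segments map straight to their IDs.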

◆ capitalize_first_letter()

std::string Tokenizer::capitalize_first_letter(std::string s) const [private]

Definition at line 1945 of file tokenizer.cpp.

Referenced by tokens_to_ids().

◆ decode()

std::string Tokenizer::decode(const std::vector< int > &ids, bool skip_special_tokens = true) const

Decodes token IDs back to text.

- Parameters
  - ids: Vector of token IDs to decode.
  - skip_special_tokens: Whether to omit special tokens from the output.

- Returns

- The decoded text

Definition at line 1394 of file tokenizer.cpp.

References bos_token_id_, BPE_SPACE_CHAR, Logger::debug(), decode_sentencepiece(), eos_token_id_, id_to_added_token_, id_to_token_, ModelConfig::LLAMA_SENTENCEPIECE, pad_token_id_, tokenizer_family_, unk_token_, and unk_token_id_.
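A minimal sketch of SentencePiece-style decoding with skip_special_tokens: concatenate token strings, skip special IDs, turn the word-boundary marker back into spaces, and drop the leading space that marker produces. It assumes the marker is U+2581 ("▁"), the usual SentencePiece convention; whether BPE_SPACE_CHAR has exactly this value is not stated here. decode_sketch is hypothetical, and it skips out-of-range IDs where the real decode() may substitute unk_token_.

```cpp
#include <cassert>
#include <string>
#include <unordered_set>
#include <vector>

// Hypothetical SentencePiece-style decode; assumes "\xE2\x96\x81" (U+2581) marks word starts.
std::string decode_sketch(const std::vector<int>& ids, const std::vector<std::string>& id_to_token,
                          const std::unordered_set<int>& special_ids, bool skip_special_tokens = true) {
    std::string out;
    for (int id : ids) {
        if (skip_special_tokens && special_ids.count(id)) continue;
        if (id < 0 || id >= static_cast<int>(id_to_token.size())) continue;  // ignore invalid IDs
        out += id_to_token[id];
    }
    const std::string marker = "\xE2\x96\x81";
    for (std::string::size_type pos = out.find(marker); pos != std::string::npos;
         pos = out.find(marker, pos + 1)) {
        out.replace(pos, marker.size(), " ");  // marker -> single space
    }
    // SentencePiece prefixes the first word with a marker; drop the resulting leading space.
    if (!out.empty() && out[0] == ' ') out.erase(0, 1);
    return out;
}
```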

◆ decode_sentencepiece()

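std::string Tokenizer::decode_sentencepiece(const std::vector< int > &ids, bool skip_special_tokens) const [private]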

Definition at line 1476 of file tokenizer.cpp.

References bos_token_id_, Logger::debug(), eos_token_id_, id_to_added_token_, id_to_token_, pad_token_id_, unk_token_, unk_token_id_, and Logger::warning().

Referenced by decode().

◆ detokenize()

std::string Tokenizer::detokenize(const std::vector< std::string > &tokens) const

Combines tokens back into text.

- Parameters
  - tokens: Vector of token strings to combine.

- Returns

- The reconstructed text

Definition at line 1050 of file tokenizer.cpp.

◆ encode()

std::vector< int > Tokenizer::encode(const std::string &text, bool add_bos = true, bool add_eos = false, PreTokenizeMethod pre_tok_override = PreTokenizeMethod::DEFAULT) const

Encodes text into token IDs with optional special tokens.

- Parameters
  - text: Text to encode.
  - add_bos: Whether to add the beginning-of-sequence token.
  - add_eos: Whether to add the end-of-sequence token.
  - pre_tok_override: Overrides the default pre-tokenization method.

- Returns

- Vector of token IDs

Definition at line 1141 of file tokenizer.cpp.

References added_tokens_, bos_token_, bos_token_id_, bpe_tokenize(), bpe_tokenize_from_scores(), bpe_tokenize_to_ids(), Logger::debug(), DEFAULT, eos_token_, eos_token_id_, Logger::error(), initialized_from_gguf_, ModelConfig::LLAMA3_TIKTOKEN, LLAMA_REGEX, ModelConfig::LLAMA_SENTENCEPIECE, pre_tok_type_, token_to_id_, tokenizer_family_, tokens_to_ids(), unk_token_, unk_token_id_, and Logger::warning().
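The add_bos/add_eos flags of encode() amount to wrapping the encoded IDs. A minimal sketch, assuming a negative ID means the special token is not defined (consistent with the -1 defaults of bos_token_id_ and eos_token_id_); add_special_tokens is a hypothetical helper.

```cpp
#include <cassert>
#include <vector>

// Hypothetical helper mirroring encode()'s defaults: BOS on, EOS off.
// Negative IDs are treated as "token not defined" and never inserted.
std::vector<int> add_special_tokens(std::vector<int> ids, int bos_id, int eos_id,
                                    bool add_bos = true, bool add_eos = false) {
    if (add_bos && bos_id >= 0) ids.insert(ids.begin(), bos_id);
    if (add_eos && eos_id >= 0) ids.push_back(eos_id);
    return ids;
}
```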

◆ eos_token_id()

int Tokenizer::eos_token_id() const [inline]

Gets the end-of-sequence token ID.

- Returns

- EOS token ID

Definition at line 169 of file tokenizer.h.

References eos_token_id_.

◆ find_bpe_rank()

int Tokenizer::find_bpe_rank(const std::string &token_left, const std::string &token_right) const [private]

Definition at line 51 of file tokenizer.cpp.

References bpe_merges_.

◆ get_gguf_chat_template()

const std::string & Tokenizer::get_gguf_chat_template() const

Returns the chat template string read from GGUF metadata.

Definition at line 2075 of file tokenizer.cpp.

References gguf_chat_template_.

Referenced by main().

◆ ids_to_tokens()

std::vector< std::string > Tokenizer::ids_to_tokens(const std::vector< int > &ids) const

Converts token IDs back to token strings.

- Parameters
  - ids: Vector of token IDs to convert.

- Returns

- Vector of token strings

Definition at line 258 of file tokenizer.cpp.

References id_to_added_token_, id_to_token_, unk_token_, and Logger::warning().
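ids_to_tokens() references id_to_added_token_, id_to_token_ and unk_token_, which suggests a lookup chain along these lines. The order (added tokens before the main vocabulary) and the name ids_to_tokens_sketch are assumptions.

```cpp
#include <cassert>
#include <string>
#include <unordered_map>
#include <vector>

// Hypothetical lookup chain: added tokens, then the main vocabulary, then the unknown token.
std::vector<std::string> ids_to_tokens_sketch(const std::vector<int>& ids,
                                              const std::vector<std::string>& id_to_token,
                                              const std::unordered_map<int, std::string>& id_to_added_token,
                                              const std::string& unk_token) {
    std::vector<std::string> out;
    out.reserve(ids.size());
    for (int id : ids) {
        auto added = id_to_added_token.find(id);
        if (added != id_to_added_token.end()) {
            out.push_back(added->second);
        } else if (id >= 0 && id < static_cast<int>(id_to_token.size())) {
            out.push_back(id_to_token[id]);
        } else {
            out.push_back(unk_token);  // out-of-range ID
        }
    }
    return out;
}
```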

◆ is_added_token()

bool Tokenizer::is_added_token(int id) const

Checks if a token ID represents an added token.

- Parameters
  - id: Token ID to check.

- Returns

- true if the ID belongs to an added token, false otherwise

◆ load_bpe_merges_from_json()

void Tokenizer::load_bpe_merges_from_json(const std::string &model_path) [private]

Loads BPE merge rules from JSON file.

Definition at line 1860 of file tokenizer.cpp.

References bpe_merges_, Logger::info(), and Logger::warning().

Referenced by Tokenizer().

◆ load_sentencepiece_model()

void Tokenizer::load_sentencepiece_model(const std::string &model_path) [private]

Loads a SentencePiece model.

Definition at line 1855 of file tokenizer.cpp.

References sentencepiece_model_loaded_, and Logger::warning().

Referenced by Tokenizer().

◆ load_vocab_from_json()

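void Tokenizer::load_vocab_from_json(const std::string &vocab_path, std::unordered_map< std::string, int > &token_to_id, std::vector< std::string > &id_to_token) [private]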

Loads vocabulary from JSON file.

Definition at line 1708 of file tokenizer.cpp.

References added_tokens_, bos_token_, bos_token_id_, Logger::debug(), eos_token_, eos_token_id_, id_to_added_token_, Logger::info(), pad_token_, pad_token_id_, unk_token_, unk_token_id_, and Logger::warning().

Referenced by Tokenizer().

◆ pad_token_id()

int Tokenizer::pad_token_id() const [inline]

Gets the padding token ID.

- Returns

- PAD token ID

Definition at line 175 of file tokenizer.h.

References pad_token_id_.

◆ tokenize()

std::vector< std::string > Tokenizer::tokenize(const std::string &text) const

Tokenizes input text into token strings.

- Parameters
  - text: The input text to tokenize.

- Returns

- Vector of token strings

◆ tokens_to_ids()

std::vector< int > Tokenizer::tokens_to_ids(const std::vector< std::string > &tokens) const [private]

Definition at line 171 of file tokenizer.cpp.

References added_tokens_, byte_char_to_id_, capitalize_first_letter(), Logger::debug(), token_to_id_, and unk_token_id_.

Referenced by encode().
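tokens_to_ids() references added_tokens_, token_to_id_, byte_char_to_id_ and unk_token_id_. A sketch of one plausible lookup order for this direction; the precedence, the single-character byte fallback, and the name tokens_to_ids_sketch are assumptions, and the capitalize_first_letter() step the real function also references is left out.

```cpp
#include <cassert>
#include <string>
#include <unordered_map>
#include <vector>

// Hypothetical lookup chain: added tokens, main vocabulary, single-character
// byte mapping, then the unknown-token ID.
std::vector<int> tokens_to_ids_sketch(const std::vector<std::string>& tokens,
                                      const std::unordered_map<std::string, int>& added_tokens,
                                      const std::unordered_map<std::string, int>& token_to_id,
                                      const std::unordered_map<char, int>& byte_char_to_id,
                                      int unk_id) {
    std::vector<int> ids;
    ids.reserve(tokens.size());
    for (const std::string& tok : tokens) {
        auto added = added_tokens.find(tok);
        if (added != added_tokens.end()) { ids.push_back(added->second); continue; }
        auto vocab = token_to_id.find(tok);
        if (vocab != token_to_id.end()) { ids.push_back(vocab->second); continue; }
        if (tok.size() == 1) {
            auto byte_it = byte_char_to_id.find(tok[0]);
            if (byte_it != byte_char_to_id.end()) { ids.push_back(byte_it->second); continue; }
        }
        ids.push_back(unk_id);  // nothing matched
    }
    return ids;
}
```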

◆ unk_token_id()

int Tokenizer::unk_token_id() const [inline]

Gets the unknown token ID.

- Returns

- UNK token ID

Definition at line 181 of file tokenizer.h.

References unk_token_id_.

◆ vocab_size()

int Tokenizer::vocab_size() const

Returns the size of the vocabulary.

- Returns

- Number of tokens in vocabulary

Referenced by Tokenizer().

Member Data Documentation

◆ added_tokens_

std::unordered_map< std::string, int > Tokenizer::added_tokens_ [private]

Added tokens, mapped from token string to token ID.

Definition at line 213 of file tokenizer.h.

Referenced by apply_chat_template(), bpe_tokenize_to_ids(), encode(), load_vocab_from_json(), Tokenizer(), Tokenizer(), and tokens_to_ids().

◆ bos_token_

std::string Tokenizer::bos_token_ [private]

Beginning of sequence token string

Definition at line 217 of file tokenizer.h.

Referenced by apply_chat_template(), encode(), load_vocab_from_json(), Tokenizer(), and Tokenizer().

◆ bos_token_id_

int Tokenizer::bos_token_id_ = -1 [private]

Beginning of sequence token ID

Definition at line 223 of file tokenizer.h.

Referenced by apply_chat_template(), bos_token_id(), bpe_tokenize_to_ids(), decode(), decode_sentencepiece(), encode(), load_vocab_from_json(), Tokenizer(), and Tokenizer().

◆ bpe_merges_

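std::unordered_map< std::string, int > Tokenizer::bpe_merges_ [private]

BPE merge rules, each mapped to a rank that sets its merge order (used for SentencePiece and Tiktoken vocabularies).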

Definition at line 207 of file tokenizer.h.

Referenced by add_bigram_to_queue_refactored(), bpe_tokenize(), bpe_tokenize_from_scores(), find_bpe_rank(), load_bpe_merges_from_json(), and Tokenizer().

◆ byte_char_to_id_

std::unordered_map< char, int > Tokenizer::byte_char_to_id_ [private]

Maps byte-level characters to token IDs.

Definition at line 231 of file tokenizer.h.

Referenced by bpe_tokenize_to_ids(), Tokenizer(), and tokens_to_ids().

◆ chat_template_special_tokens

std::unordered_set< std::string > Tokenizer::chat_template_special_tokens [private]

Special tokens used by chat templates.

Definition at line 230 of file tokenizer.h.

◆ eos_token_

std::string Tokenizer::eos_token_ [private]

End of sequence token string

Definition at line 218 of file tokenizer.h.

Referenced by apply_chat_template(), encode(), load_vocab_from_json(), Tokenizer(), and Tokenizer().

◆ eos_token_id_

int Tokenizer::eos_token_id_ = -1 [private]

End of sequence token ID

Definition at line 224 of file tokenizer.h.

Referenced by apply_chat_template(), bpe_tokenize_to_ids(), decode(), decode_sentencepiece(), encode(), eos_token_id(), load_vocab_from_json(), Tokenizer(), and Tokenizer().

◆ gguf_chat_template_

std::string Tokenizer::gguf_chat_template_ [private]

Chat template string from GGUF metadata

Definition at line 234 of file tokenizer.h.

Referenced by apply_chat_template(), get_gguf_chat_template(), and Tokenizer().

◆ id_to_added_token_

std::unordered_map< int, std::string > Tokenizer::id_to_added_token_ [private]

Maps IDs to added tokens

Definition at line 229 of file tokenizer.h.

Referenced by decode(), decode_sentencepiece(), ids_to_tokens(), load_vocab_from_json(), Tokenizer(), and Tokenizer().

◆ id_to_token_

std::vector< std::string > Tokenizer::id_to_token_ [private]

Maps IDs to their tokens

Definition at line 206 of file tokenizer.h.

Referenced by decode(), decode_sentencepiece(), ids_to_tokens(), Tokenizer(), and Tokenizer().

◆ initialized_from_gguf_

bool Tokenizer::initialized_from_gguf_ = false [private]

◆ pad_token_

std::string Tokenizer::pad_token_ [private]

Padding token string

Definition at line 219 of file tokenizer.h.

Referenced by load_vocab_from_json(), Tokenizer(), and Tokenizer().

◆ pad_token_id_

int Tokenizer::pad_token_id_ = -1 [private]

Padding token ID

Definition at line 225 of file tokenizer.h.

Referenced by decode(), decode_sentencepiece(), load_vocab_from_json(), pad_token_id(), Tokenizer(), and Tokenizer().

◆ pre_tok_type_

std::string Tokenizer::pre_tok_type_ = "unknown" [private]

◆ sentencepiece_model_loaded_

bool Tokenizer::sentencepiece_model_loaded_ = false [private]

Whether a SentencePiece model has been loaded.

Definition at line 227 of file tokenizer.h.

Referenced by load_sentencepiece_model().

◆ tiktoken_merges_list_

std::vector< std::string > Tokenizer::tiktoken_merges_list_ [private]

Tiktoken BPE merge rules, loaded as ordered list from GGUF

Definition at line 208 of file tokenizer.h.

◆ token_scores_

std::vector< float > Tokenizer::token_scores_ [private]

Token scores for BPE (primarily for Llama 2 GGUF files).

Definition at line 209 of file tokenizer.h.

Referenced by Tokenizer().

◆ token_to_id_

std::unordered_map< std::string, int > Tokenizer::token_to_id_ [private]

Maps tokens to their IDs

Definition at line 205 of file tokenizer.h.

Referenced by apply_chat_template(), bpe_tokenize_to_ids(), encode(), Tokenizer(), Tokenizer(), and tokens_to_ids().

◆ token_types_

std::vector< int32_t > Tokenizer::token_types_ [private]

Token type information from GGUF

Definition at line 210 of file tokenizer.h.

Referenced by Tokenizer().

◆ tokenizer_family_

ModelConfig::TokenizerFamily Tokenizer::tokenizer_family_ = ModelConfig::TokenizerFamily::UNKNOWN [private]

Definition at line 211 of file tokenizer.h.

Referenced by decode(), encode(), Tokenizer(), and Tokenizer().

◆ type_

Type Tokenizer::type_ = Type::UNKNOWN [private]

Definition at line 233 of file tokenizer.h.

Referenced by Tokenizer().

◆ unk_token_

std::string Tokenizer::unk_token_ [private]

Unknown token string

Definition at line 216 of file tokenizer.h.

Referenced by decode(), decode_sentencepiece(), encode(), ids_to_tokens(), load_vocab_from_json(), Tokenizer(), and Tokenizer().

◆ unk_token_id_

int Tokenizer::unk_token_id_ = 0 [private]

Unknown token ID

Definition at line 222 of file tokenizer.h.

Referenced by bpe_tokenize_to_ids(), decode(), decode_sentencepiece(), encode(), load_vocab_from_json(), Tokenizer(), Tokenizer(), tokens_to_ids(), and unk_token_id().

The documentation for this class was generated from the following files: