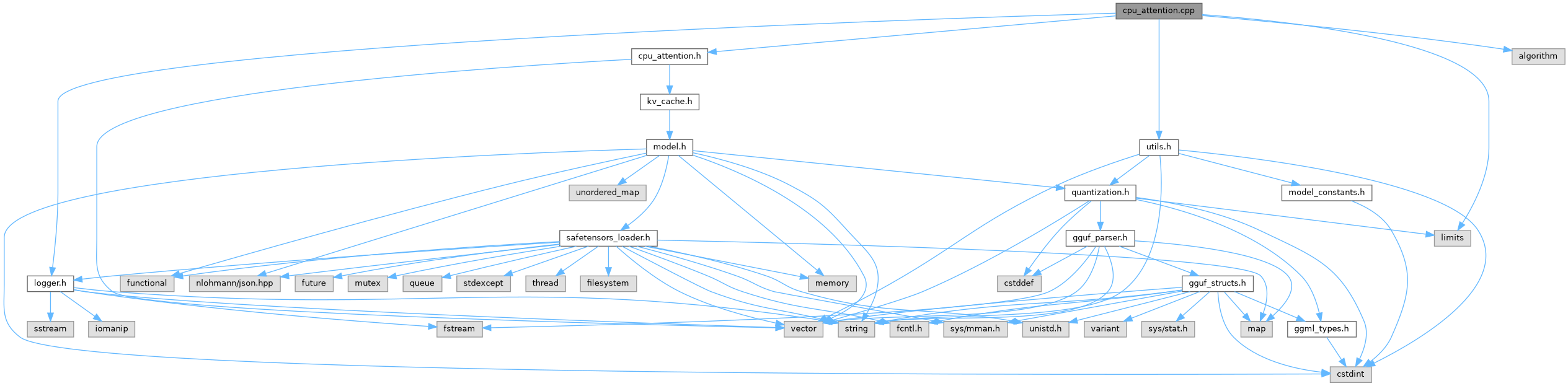

#include "cpu_attention.h"

#include "logger.h"

#include "utils.h"

#include <algorithm>

#include <limits>


Functions

| Return type | Function |
|---|---|
| void | update_kv_cache_batch_cpu (KVCache *kv_cache, int layer_idx, const std::vector< float > &k_batch_for_layer, const std::vector< float > &v_batch_for_layer, int num_tokens_in_batch, int start_pos_in_sequence, int num_kv_heads, int head_dim) |
| void | attention_batch_cpu (const std::vector< float > &q_batch_roped, KVCacheLayer &current_layer_kv_cache, std::vector< float > &batch_attn_output, int num_tokens_in_batch, int start_pos_in_sequence, int num_q_heads, int num_kv_heads, int head_dim, float attention_scale) |
| void | update_kv_cache_batch_cpu_sequence_aware (KVCache *kv_cache, int layer_idx, const std::vector< float > &k_batch_for_layer, const std::vector< float > &v_batch_for_layer, int num_tokens_in_batch, const std::vector< int > &sequence_indices, const std::vector< int > &position_in_sequence, int num_kv_heads, int head_dim) |
| void | attention_batch_cpu_sequence_aware (const std::vector< float > &q_batch_roped, KVCacheLayer &current_layer_kv_cache, std::vector< float > &batch_attn_output, int num_tokens_in_batch, const std::vector< int > &sequence_indices, const std::vector< int > &position_in_sequence, int num_q_heads, int num_kv_heads, int head_dim, float attention_scale, int max_seq_len_per_sequence) |

Function Documentation

◆ attention_batch_cpu()

void attention_batch_cpu takes the following parameters, in order:

| Type | Name |
|---|---|
| const std::vector< float > & | q_batch_roped |
| KVCacheLayer & | current_layer_kv_cache |
| std::vector< float > & | batch_attn_output |
| int | num_tokens_in_batch |
| int | start_pos_in_sequence |
| int | num_q_heads |
| int | num_kv_heads |
| int | head_dim |
| float | attention_scale |

Definition at line 91 of file cpu_attention.cpp.

References Logger::error(), Logger::info(), KVCacheLayer::k, softmax_vector_cpu(), KVCacheLayer::v, and Logger::warning().

Referenced by CPUBatchProcessor::forward_cpu_batch().
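The parameter list suggests scaled dot-product attention over a contiguous batch of tokens that starts at `start_pos_in_sequence`. The following is a minimal sketch of that idea, not the code in cpu_attention.cpp. It assumes causal masking, a grouped-query mapping of `num_q_heads / num_kv_heads` query heads per KV head, and a flat `[position][kv_head][head_dim]` cache layout. `SketchKVLayer`, `sketch_softmax`, and `sketch_attention_batch` are hypothetical names introduced only for illustration.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for KVCacheLayer. Only the k and v members appear in
// the reference list; the flat [position][kv_head][head_dim] layout is assumed.
struct SketchKVLayer {
    std::vector<float> k;
    std::vector<float> v;
};

// Numerically stable in-place softmax. Local helper, not softmax_vector_cpu().
void sketch_softmax(std::vector<float>& x) {
    assert(!x.empty());
    const float max_val = *std::max_element(x.begin(), x.end());
    float sum = 0.0f;
    for (float& e : x) { e = std::exp(e - max_val); sum += e; }
    for (float& e : x) e /= sum;
}

// Causal attention for a batch of consecutive tokens (assumed semantics).
// Token t sits at absolute position start_pos + t and attends to cache
// positions 0..start_pos + t, which must already hold its own K/V rows.
void sketch_attention_batch(const std::vector<float>& q_batch,
                            const SketchKVLayer& layer,
                            std::vector<float>& out,
                            int num_tokens, int start_pos,
                            int num_q_heads, int num_kv_heads,
                            int head_dim, float scale) {
    assert(num_tokens >= 0 && start_pos >= 0 && head_dim > 0);
    assert(num_kv_heads > 0 && num_q_heads % num_kv_heads == 0);
    const std::size_t hd = static_cast<std::size_t>(head_dim);
    const std::size_t heads = static_cast<std::size_t>(num_q_heads);
    const std::size_t q_row = heads * hd;
    const std::size_t kv_row = static_cast<std::size_t>(num_kv_heads) * hd;
    const std::size_t tokens = static_cast<std::size_t>(num_tokens);
    const std::size_t start = static_cast<std::size_t>(start_pos);
    const std::size_t group = static_cast<std::size_t>(num_q_heads / num_kv_heads);
    assert(q_batch.size() == tokens * q_row);
    assert(layer.k.size() >= (start + tokens) * kv_row);
    assert(layer.v.size() >= (start + tokens) * kv_row);

    out.assign(tokens * q_row, 0.0f);
    for (std::size_t t = 0; t < tokens; ++t) {
        const std::size_t ctx = start + t + 1;  // number of visible positions
        for (std::size_t h = 0; h < heads; ++h) {
            const float* q = q_batch.data() + t * q_row + h * hd;
            const std::size_t kv_off = (h / group) * hd;  // shared KV head
            std::vector<float> scores(ctx);
            for (std::size_t p = 0; p < ctx; ++p) {
                const float* k = layer.k.data() + p * kv_row + kv_off;
                float dot = 0.0f;
                for (std::size_t d = 0; d < hd; ++d) dot += q[d] * k[d];
                scores[p] = dot * scale;
            }
            sketch_softmax(scores);
            float* o = out.data() + t * q_row + h * hd;
            for (std::size_t p = 0; p < ctx; ++p) {
                const float* v = layer.v.data() + p * kv_row + kv_off;
                for (std::size_t d = 0; d < hd; ++d) o[d] += scores[p] * v[d];
            }
        }
    }
}
```

In this sketch a typical `scale` would be `1.0f / std::sqrt(static_cast<float>(head_dim))`. The actual function may lay out the cache differently and also logs through Logger, which the sketch omits.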

◆ attention_batch_cpu_sequence_aware()

void attention_batch_cpu_sequence_aware takes the following parameters, in order:

| Type | Name |
|---|---|
| const std::vector< float > & | q_batch_roped |
| KVCacheLayer & | current_layer_kv_cache |
| std::vector< float > & | batch_attn_output |
| int | num_tokens_in_batch |
| const std::vector< int > & | sequence_indices |
| const std::vector< int > & | position_in_sequence |
| int | num_q_heads |
| int | num_kv_heads |
| int | head_dim |
| float | attention_scale |
| int | max_seq_len_per_sequence |

Definition at line 254 of file cpu_attention.cpp.

References Logger::error(), KVCacheLayer::k, softmax_vector_cpu(), and KVCacheLayer::v.

Referenced by CPUBatchProcessor::forward_cpu_batch().

◆ update_kv_cache_batch_cpu()

void update_kv_cache_batch_cpu takes the following parameters, in order:

| Type | Name |
|---|---|
| KVCache * | kv_cache |
| int | layer_idx |
| const std::vector< float > & | k_batch_for_layer |
| const std::vector< float > & | v_batch_for_layer |
| int | num_tokens_in_batch |
| int | start_pos_in_sequence |
| int | num_kv_heads |
| int | head_dim |

Definition at line 7 of file cpu_attention.cpp.

References Logger::error(), Logger::info(), KVCacheLayer::k, KVCache::layers, KVCache::max_batch_size, KVCache::max_seq_len_config_, and KVCacheLayer::v.

Referenced by CPUBatchProcessor::forward_cpu_batch().
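Judging by its parameters, this function writes a contiguous run of K and V rows into one layer of the cache, beginning at `start_pos_in_sequence`. A minimal sketch of such a write follows, under stated assumptions: the cache is a flat `[position][kv_head][head_dim]` array, and the capacity check uses a plain `max_seq_len` argument in place of `KVCache::max_seq_len_config_`. `SketchKVLayer` and `sketch_update_kv_cache_batch` are hypothetical names, not part of the documented API.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for KVCacheLayer. Only the k and v members appear in
// the reference list; the flat [position][kv_head][head_dim] layout is assumed.
struct SketchKVLayer {
    std::vector<float> k;
    std::vector<float> v;
};

// Copies num_tokens consecutive K/V rows into the cache at start_pos.
// Returns false and leaves the cache untouched if the inputs are
// inconsistent or the batch would run past max_seq_len.
bool sketch_update_kv_cache_batch(SketchKVLayer& layer,
                                  const std::vector<float>& k_batch,
                                  const std::vector<float>& v_batch,
                                  int num_tokens, int start_pos,
                                  int num_kv_heads, int head_dim,
                                  int max_seq_len) {
    assert(num_kv_heads > 0 && head_dim > 0);
    if (num_tokens < 0 || start_pos < 0 || max_seq_len < 0) return false;
    if (start_pos > max_seq_len - num_tokens) return false;  // would overflow

    const std::size_t row = static_cast<std::size_t>(num_kv_heads) *
                            static_cast<std::size_t>(head_dim);
    const std::size_t need = static_cast<std::size_t>(num_tokens) * row;
    const std::size_t capacity = static_cast<std::size_t>(max_seq_len) * row;
    if (k_batch.size() != need || v_batch.size() != need) return false;
    if (layer.k.size() < capacity || layer.v.size() < capacity) return false;

    // One contiguous copy per tensor, since rows are stored back to back.
    const auto offset =
        static_cast<std::ptrdiff_t>(static_cast<std::size_t>(start_pos) * row);
    std::copy(k_batch.begin(), k_batch.end(), layer.k.begin() + offset);
    std::copy(v_batch.begin(), v_batch.end(), layer.v.begin() + offset);
    return true;
}
```

The documented function returns void and reports problems through Logger::error(); the boolean return here is only a convenience so the sketch can be checked without a logger.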

◆ update_kv_cache_batch_cpu_sequence_aware()

void update_kv_cache_batch_cpu_sequence_aware takes the following parameters, in order:

| Type | Name |
|---|---|
| KVCache * | kv_cache |
| int | layer_idx |
| const std::vector< float > & | k_batch_for_layer |
| const std::vector< float > & | v_batch_for_layer |
| int | num_tokens_in_batch |
| const std::vector< int > & | sequence_indices |
| const std::vector< int > & | position_in_sequence |
| int | num_kv_heads |
| int | head_dim |

Definition at line 203 of file cpu_attention.cpp.

References Logger::error(), KVCacheLayer::k, KVCache::layers, KVCache::max_batch_size, KVCache::max_seq_len_config_, and KVCacheLayer::v.

Referenced by CPUBatchProcessor::forward_cpu_batch().
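Unlike the contiguous variant, the sequence-aware update receives a sequence index and a position for every token, which suggests a scatter into per-sequence regions of the cache. The sketch below illustrates one way this could work. It assumes each sequence owns a slab of `max_seq_len` rows, so a token lands at row `sequence_indices[t] * max_seq_len + position_in_sequence[t]`; that addressing scheme is a guess, not something this page confirms. `SketchKVLayer` and `sketch_update_kv_cache_sequence_aware` are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for KVCacheLayer. Only the k and v members appear in
// the reference list; the slab-per-sequence layout below is assumed.
struct SketchKVLayer {
    std::vector<float> k;
    std::vector<float> v;
};

// Scatters each token's K/V row to its (sequence, position) slot.
// Tokens whose indices fall outside the cache are skipped.
// Returns the number of tokens actually written.
int sketch_update_kv_cache_sequence_aware(
        SketchKVLayer& layer,
        const std::vector<float>& k_batch,
        const std::vector<float>& v_batch,
        const std::vector<int>& sequence_indices,
        const std::vector<int>& position_in_sequence,
        int num_kv_heads, int head_dim,
        int max_batch_size, int max_seq_len) {
    assert(num_kv_heads > 0 && head_dim > 0);
    assert(max_batch_size >= 0 && max_seq_len >= 0);
    const std::size_t row = static_cast<std::size_t>(num_kv_heads) *
                            static_cast<std::size_t>(head_dim);
    const std::size_t n = sequence_indices.size();
    const std::size_t slab = static_cast<std::size_t>(max_seq_len);
    const std::size_t capacity = static_cast<std::size_t>(max_batch_size) * slab * row;
    assert(position_in_sequence.size() == n);
    assert(k_batch.size() == n * row && v_batch.size() == n * row);
    assert(layer.k.size() >= capacity && layer.v.size() >= capacity);

    int written = 0;
    for (std::size_t t = 0; t < n; ++t) {
        const int s = sequence_indices[t];
        const int p = position_in_sequence[t];
        if (s < 0 || s >= max_batch_size || p < 0 || p >= max_seq_len) continue;
        const auto src = static_cast<std::ptrdiff_t>(t * row);
        const auto dst = static_cast<std::ptrdiff_t>(
            (static_cast<std::size_t>(s) * slab + static_cast<std::size_t>(p)) * row);
        std::copy_n(k_batch.begin() + src, row, layer.k.begin() + dst);
        std::copy_n(v_batch.begin() + src, row, layer.v.begin() + dst);
        ++written;
    }
    return written;
}
```

With this addressing, tokens from different sequences can be interleaved freely in one batch without overwriting each other, which is the usual motivation for passing per-token indices instead of a single start position.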