Weight quantization structures and functions for model compression. More...

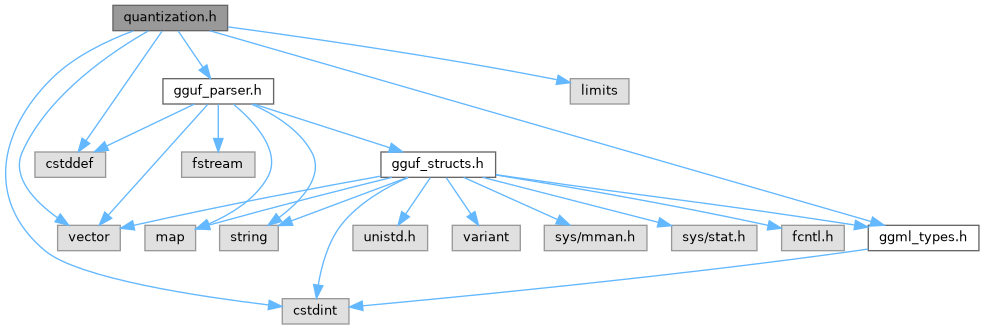

#include <cstddef>#include <cstdint>#include <limits>#include <vector>#include "ggml_types.h"#include "gguf_parser.h"

Go to the source code of this file.

Classes | |

| struct | block_q4_K |

| 4-bit K-quantized block structure More... | |

| struct | block_q6_K |

| 6-bit K-quantized block structure More... | |

| struct | block_q2_K |

| 2-bit K-quantized block structure More... | |

| struct | block_q3_K |

| 3-bit K-quantized block structure More... | |

| struct | block_q8_K |

| 8-bit K-quantized block structure with block sums More... | |

| struct | block_q8_0 |

| Simple 8-bit quantized block structure. More... | |

Macros | |

| #define | RESTRICT __restrict__ |

Functions | |

| float | fp16_to_fp32 (uint16_t h, bool is_gguf_scale_field=false) |

| Converts a 16-bit floating point number to 32-bit float. | |

| uint16_t | fp32_to_fp16 (float f) |

| Converts a 32-bit float to 16-bit floating point. | |

| const char * | ggml_type_name (GGMLType type) |

| Gets the string name of a GGML type. | |

| size_t | ggml_type_size (GGMLType type) |

| Gets the size in bytes of a GGML type. | |

| size_t | ggml_type_block_size (GGMLType type) |

| Gets the block size for a GGML type. | |

| void | dequantize_q2_k (const void *q_data, float *f_data, int num_weights_in_block, bool log_details_for_this_block=false) |

| Dequantizes a Q2_K quantized block to float32. | |

| void | dequantize_q4_k_m (const block_q4_K *qblock, float *RESTRICT output_f32, int num_elements, bool log_this_block=false) |

| Dequantizes a Q4_K quantized block to float32. | |

| void | dequantize_q6_k (const block_q6_K *qblock, float *RESTRICT output_f32, int num_elements, bool log_this_block=false) |

| Dequantizes a Q6_K quantized block to float32. | |

| void | dequantize_vector_q6k_to_f32 (const std::vector< block_q6_K > &q_weights, std::vector< float > &f32_weights, size_t total_num_elements, int log_first_n_blocks=0) |

| Dequantizes a vector of Q6_K blocks to a vector of float32. | |

| void | dequantize_q3_k (const void *q_data, float *f_data, int num_weights_in_block) |

| Dequantizes a Q3_K quantized block to float32. | |

| void | handle_i8_tensor (const void *i8_data, float *f_data, size_t num_elements) |

| Handles conversion of int8 tensor data to float32. | |

| void | quantize_q4_k_m (const float *f_data, void *q_data, int num_elements) |

| Quantizes float32 data to Q4_K format. | |

| void | quantize_q6_k (const float *f_data, void *q_data, int num_elements) |

| Quantizes float32 data to Q6_K format. | |

| std::vector< block_q8_K > | quantize_fp32_to_q8_K (const std::vector< float > &f_data) |

| Quantizes float32 data to Q8_K format. | |

| float | vec_dot_q6_k_q8_k_cpu (int n, const std::vector< block_q6_K > &x, const std::vector< block_q8_K > &y, bool log_this_call) |

| Computes dot product between Q6_K and Q8_K vectors on CPU. | |

| void | matvec_q6k_q8k_cpu (const std::vector< block_q6_K > &mat_q6k, const std::vector< block_q8_K > &vec_q8k, std::vector< float > &out_f32, int rows, int cols, bool log_calls) |

| Computes matrix-vector product between Q6_K matrix and Q8_K vector on CPU. | |

| float | vec_dot_q4_k_q8_k_cpu (int n, const std::vector< block_q4_K > &x_vec, const std::vector< block_q8_K > &y_vec, bool log_this_call) |

| Computes dot product between Q4_K and Q8_K vectors on CPU. | |

| void | matvec_q4k_q8k_cpu (const std::vector< block_q4_K > &mat_q4k, const std::vector< block_q8_K > &vec_q8k, std::vector< float > &out_f32, int rows, int cols, bool log_calls) |

| Computes matrix-vector product between Q4_K matrix and Q8_K vector on CPU. | |

| void | dequantize_q8_0_block (const block_q8_0 *qblock, float *output) |

| Dequantizes a Q8_0 block to float32. | |

| void | dequantize_vector_q4k_to_f32 (const std::vector< block_q4_K > &q_weights, std::vector< float > &f32_weights, size_t total_num_elements, int log_first_n_blocks=0) |

| Dequantizes a vector of Q4_K blocks to a vector of float32. | |

| void | dequantize_vector_q8_0_to_f32 (const std::vector< block_q8_0 > &q_weights, std::vector< float > &f32_weights, size_t total_num_elements, int log_first_n_blocks=0) |

| Dequantizes a vector of Q8_0 blocks to a vector of float32. | |

Detailed Description

Weight quantization structures and functions for model compression.

This file contains the definitions for various quantization formats and functions to convert between them. The quantization methods include Q2_K, Q3_K, Q4_K, Q6_K, and Q8_0 formats, each offering different compression ratios and precision tradeoffs.

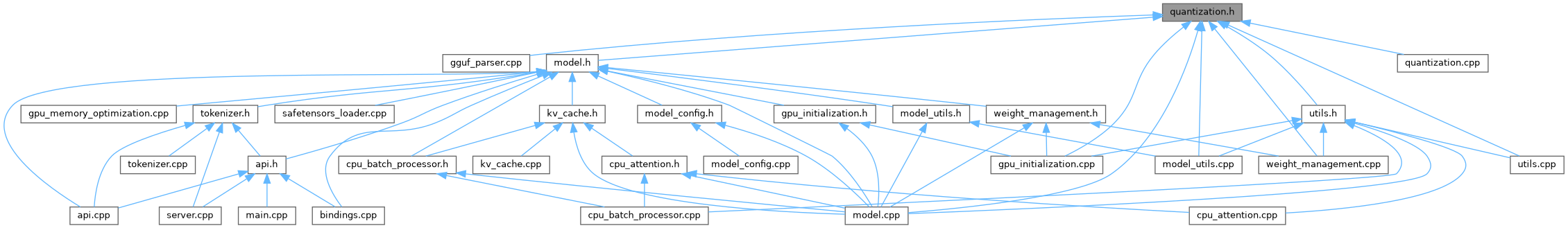

Definition in file quantization.h.

Macro Definition Documentation

◆ RESTRICT

| #define RESTRICT __restrict__ |

Definition at line 15 of file quantization.h.

Function Documentation

◆ dequantize_q2_k()

| void dequantize_q2_k | ( | const void * | q_data, |

| float * | f_data, | ||

| int | num_weights_in_block, | ||

| bool | log_details_for_this_block = false |

||

| ) |

Dequantizes a Q2_K quantized block to float32.

- Parameters

-

q_data Pointer to quantized data f_data Output float array num_weights_in_block Number of weights to dequantize log_details_for_this_block Whether to log dequantization details

◆ dequantize_q3_k()

| void dequantize_q3_k | ( | const void * | q_data, |

| float * | f_data, | ||

| int | num_weights_in_block | ||

| ) |

Dequantizes a Q3_K quantized block to float32.

- Parameters

-

q_data Pointer to quantized data f_data Output float array num_weights_in_block Number of weights to dequantize

Definition at line 476 of file quantization.cpp.

References block_q3_K::d, block_q3_K::dmin, fp16_to_fp32(), GGML_QK_K, block_q3_K::hmask, K_SCALE_VALUES, block_q3_K::qs, and block_q3_K::scales.

◆ dequantize_q4_k_m()

| void dequantize_q4_k_m | ( | const block_q4_K * | qblock, |

| float *RESTRICT | output_f32, | ||

| int | num_elements, | ||

| bool | log_this_block = false |

||

| ) |

Dequantizes a Q4_K quantized block to float32.

- Parameters

-

qblock Pointer to Q4_K block output_f32 Output float array num_elements Number of elements to dequantize log_this_block Whether to log dequantization details

◆ dequantize_q6_k()

| void dequantize_q6_k | ( | const block_q6_K * | qblock, |

| float *RESTRICT | output_f32, | ||

| int | num_elements, | ||

| bool | log_this_block = false |

||

| ) |

Dequantizes a Q6_K quantized block to float32.

- Parameters

-

qblock Pointer to Q6_K block output_f32 Output float array num_elements Number of elements to dequantize log_this_block Whether to log dequantization details

◆ dequantize_q8_0_block()

| void dequantize_q8_0_block | ( | const block_q8_0 * | qblock, |

| float * | output | ||

| ) |

Dequantizes a Q8_0 block to float32.

- Parameters

-

qblock Pointer to Q8_0 block output Output float array

Definition at line 1047 of file quantization.cpp.

References block_q8_0::d, fp16_to_fp32(), GGML_QK8_0, and block_q8_0::qs.

Referenced by dequantize_vector_q8_0_to_f32(), TinyLlamaModel::initialize_gpu_and_rope(), TinyLlamaModel::lookup_embedding(), and matvec_q8_0_f32_vector_cpu().

◆ dequantize_vector_q4k_to_f32()

| void dequantize_vector_q4k_to_f32 | ( | const std::vector< block_q4_K > & | q_weights, |

| std::vector< float > & | f32_weights, | ||

| size_t | total_num_elements, | ||

| int | log_first_n_blocks = 0 |

||

| ) |

Dequantizes a vector of Q4_K blocks to a vector of float32.

- Parameters

-

q_weights Input vector of Q4_K blocks f32_weights Output vector of float32 values (will be resized) total_num_elements Total number of float elements expected after dequantization log_first_n_blocks Number of initial blocks to log dequantization details for (0 for no logging)

Definition at line 1109 of file quantization.cpp.

References dequantize_q4_k_m(), Logger::error(), GGML_QK_K, and Logger::warning().

Referenced by TinyLlamaModel::ensure_down_proj_dequantized(), TinyLlamaModel::ensure_embed_tokens_dequantized(), TinyLlamaModel::ensure_gate_proj_dequantized(), TinyLlamaModel::ensure_k_proj_dequantized(), TinyLlamaModel::ensure_lm_head_dequantized(), TinyLlamaModel::ensure_o_proj_dequantized(), TinyLlamaModel::ensure_q_proj_dequantized(), TinyLlamaModel::ensure_up_proj_dequantized(), TinyLlamaModel::ensure_v_proj_dequantized(), and TinyLlamaModel::initialize_weights().

◆ dequantize_vector_q6k_to_f32()

| void dequantize_vector_q6k_to_f32 | ( | const std::vector< block_q6_K > & | q_weights, |

| std::vector< float > & | f32_weights, | ||

| size_t | total_num_elements, | ||

| int | log_first_n_blocks = 0 |

||

| ) |

Dequantizes a vector of Q6_K blocks to a vector of float32.

- Parameters

-

q_weights Input vector of Q6_K blocks f32_weights Output vector of float32 values (will be resized) total_num_elements Total number of float elements expected after dequantization log_first_n_blocks Number of initial blocks to log dequantization details for (0 for no logging)

Definition at line 1054 of file quantization.cpp.

References dequantize_q6_k(), Logger::error(), GGML_QK_K, and Logger::warning().

Referenced by TinyLlamaModel::ensure_down_proj_dequantized(), TinyLlamaModel::ensure_embed_tokens_dequantized(), TinyLlamaModel::ensure_gate_proj_dequantized(), TinyLlamaModel::ensure_k_proj_dequantized(), TinyLlamaModel::ensure_lm_head_dequantized(), TinyLlamaModel::ensure_o_proj_dequantized(), TinyLlamaModel::ensure_q_proj_dequantized(), TinyLlamaModel::ensure_up_proj_dequantized(), TinyLlamaModel::ensure_v_proj_dequantized(), and TinyLlamaModel::initialize_weights().

◆ dequantize_vector_q8_0_to_f32()

| void dequantize_vector_q8_0_to_f32 | ( | const std::vector< block_q8_0 > & | q_weights, |

| std::vector< float > & | f32_weights, | ||

| size_t | total_num_elements, | ||

| int | log_first_n_blocks = 0 |

||

| ) |

Dequantizes a vector of Q8_0 blocks to a vector of float32.

- Parameters

-

q_weights Input vector of Q8_0 blocks f32_weights Output vector of float32 values (will be resized) total_num_elements Total number of float elements expected after dequantization log_first_n_blocks Number of initial blocks to log dequantization details for (0 for no logging)

Definition at line 1165 of file quantization.cpp.

References dequantize_q8_0_block(), Logger::error(), GGML_QK8_0, and Logger::warning().

Referenced by TinyLlamaModel::ensure_down_proj_dequantized(), TinyLlamaModel::ensure_embed_tokens_dequantized(), TinyLlamaModel::ensure_gate_proj_dequantized(), TinyLlamaModel::ensure_k_proj_dequantized(), TinyLlamaModel::ensure_lm_head_dequantized(), TinyLlamaModel::ensure_o_proj_dequantized(), TinyLlamaModel::ensure_q_proj_dequantized(), TinyLlamaModel::ensure_up_proj_dequantized(), TinyLlamaModel::ensure_v_proj_dequantized(), and TinyLlamaModel::initialize_weights().

◆ fp16_to_fp32()

| float fp16_to_fp32 | ( | uint16_t | h, |

| bool | is_gguf_scale_field = false |

||

| ) |

Converts a 16-bit floating point number to 32-bit float.

- Parameters

-

h The 16-bit float value to convert is_gguf_scale_field Whether this value is from a GGUF scale field

- Returns

- The converted 32-bit float value

Definition at line 47 of file quantization.cpp.

Referenced by dequantize_q2_k(), dequantize_q3_k(), dequantize_q4_k_m(), dequantize_q6_k(), dequantize_q8_0_block(), dequantize_q8_k(), vec_dot_q4_k_q8_k_cpu(), and vec_dot_q6_k_q8_k_cpu().

◆ fp32_to_fp16()

| uint16_t fp32_to_fp16 | ( | float | f | ) |

Converts a 32-bit float to 16-bit floating point.

- Parameters

-

f The 32-bit float value to convert

- Returns

- The converted 16-bit float value

Definition at line 92 of file quantization.cpp.

Referenced by quantize_fp32_to_q8_K(), quantize_q4_k_m(), and quantize_q6_k().

◆ ggml_type_block_size()

| size_t ggml_type_block_size | ( | GGMLType | type | ) |

Gets the block size for a GGML type.

- Parameters

-

type The GGML type

- Returns

- Block size in elements

Definition at line 688 of file quantization.cpp.

References GGML_QK_K, GGML_TYPE_BF16, GGML_TYPE_F16, GGML_TYPE_F32, GGML_TYPE_I16, GGML_TYPE_I32, GGML_TYPE_I8, GGML_TYPE_Q2_K, GGML_TYPE_Q3_K, GGML_TYPE_Q4_0, GGML_TYPE_Q4_K, GGML_TYPE_Q6_K, and GGML_TYPE_Q8_0.

Referenced by load_gguf_meta().

◆ ggml_type_name()

| const char * ggml_type_name | ( | GGMLType | type | ) |

Gets the string name of a GGML type.

- Parameters

-

type The GGML type

- Returns

- String representation of the type

Definition at line 601 of file quantization.cpp.

References GGML_TYPE_BF16, GGML_TYPE_COUNT, GGML_TYPE_F16, GGML_TYPE_F32, GGML_TYPE_I16, GGML_TYPE_I32, GGML_TYPE_I8, GGML_TYPE_Q2_K, GGML_TYPE_Q3_K, GGML_TYPE_Q4_0, GGML_TYPE_Q4_1, GGML_TYPE_Q4_K, GGML_TYPE_Q5_0, GGML_TYPE_Q5_1, GGML_TYPE_Q5_K, GGML_TYPE_Q6_K, GGML_TYPE_Q8_0, GGML_TYPE_Q8_1, and GGML_TYPE_Q8_K.

Referenced by load_gguf_meta().

◆ ggml_type_size()

| size_t ggml_type_size | ( | GGMLType | type | ) |

Gets the size in bytes of a GGML type.

- Parameters

-

type The GGML type

- Returns

- Size in bytes

Definition at line 646 of file quantization.cpp.

References GGML_TYPE_BF16, GGML_TYPE_COUNT, GGML_TYPE_F16, GGML_TYPE_F32, GGML_TYPE_I16, GGML_TYPE_I32, GGML_TYPE_I8, GGML_TYPE_Q2_K, GGML_TYPE_Q3_K, GGML_TYPE_Q4_0, GGML_TYPE_Q4_K, GGML_TYPE_Q5_K, GGML_TYPE_Q6_K, GGML_TYPE_Q8_0, GGML_TYPE_Q8_1, and GGML_TYPE_Q8_K.

Referenced by load_gguf_meta().

◆ handle_i8_tensor()

| void handle_i8_tensor | ( | const void * | i8_data, |

| float * | f_data, | ||

| size_t | num_elements | ||

| ) |

Handles conversion of int8 tensor data to float32.

- Parameters

-

i8_data Input int8 data f_data Output float array num_elements Number of elements to convert

Definition at line 268 of file quantization.cpp.

◆ matvec_q4k_q8k_cpu()

| void matvec_q4k_q8k_cpu | ( | const std::vector< block_q4_K > & | mat_q4k, |

| const std::vector< block_q8_K > & | vec_q8k, | ||

| std::vector< float > & | out_f32, | ||

| int | rows, | ||

| int | cols, | ||

| bool | log_calls | ||

| ) |

Computes matrix-vector product between Q4_K matrix and Q8_K vector on CPU.

- Parameters

-

mat_q4k Q4_K matrix vec_q8k Q8_K vector out_f32 Output float vector rows Number of matrix rows cols Number of matrix columns log_calls Whether to log computation details

Definition at line 982 of file quantization.cpp.

References GGML_QK_K, and vec_dot_q4_k_q8_k_cpu().

◆ matvec_q6k_q8k_cpu()

| void matvec_q6k_q8k_cpu | ( | const std::vector< block_q6_K > & | mat_q6k, |

| const std::vector< block_q8_K > & | vec_q8k, | ||

| std::vector< float > & | out_f32, | ||

| int | rows, | ||

| int | cols, | ||

| bool | log_calls | ||

| ) |

Computes matrix-vector product between Q6_K matrix and Q8_K vector on CPU.

- Parameters

-

mat_q6k Q6_K matrix vec_q8k Q8_K vector out_f32 Output float vector rows Number of matrix rows cols Number of matrix columns log_calls Whether to log computation details

Definition at line 897 of file quantization.cpp.

References GGML_QK_K, and vec_dot_q6_k_q8_k_cpu().

◆ quantize_fp32_to_q8_K()

| std::vector< block_q8_K > quantize_fp32_to_q8_K | ( | const std::vector< float > & | f_data | ) |

Quantizes float32 data to Q8_K format.

- Parameters

-

f_data Input float vector

- Returns

- Vector of Q8_K blocks

Definition at line 719 of file quantization.cpp.

References block_q8_K::d, Logger::debug(), fp32_to_fp16(), GGML_QK_K, Q8K_SCALE_FACTOR, block_q8_K::qs, SAFE_MAX, and SAFE_MIN.

◆ quantize_q4_k_m()

| void quantize_q4_k_m | ( | const float * | f_data, |

| void * | q_data, | ||

| int | num_elements | ||

| ) |

Quantizes float32 data to Q4_K format.

- Parameters

-

f_data Input float array q_data Output quantized data num_elements Number of elements to quantize

Definition at line 276 of file quantization.cpp.

References block_q4_K::d, block_q4_K::dmin, fp32_to_fp16(), GGML_QK_K, GGUF_EPSILON, GGUF_SMALL_VAL, K_MIN_VALUES, K_SCALE_VALUES, Q4K_OFFSET, Q4K_SCALE_FACTOR, block_q4_K::qs, SAFE_MAX, SAFE_MIN, and block_q4_K::scales.

◆ quantize_q6_k()

| void quantize_q6_k | ( | const float * | f_data, |

| void * | q_data, | ||

| int | num_elements | ||

| ) |

Quantizes float32 data to Q6_K format.

- Parameters

-

f_data Input float array q_data Output quantized data num_elements Number of elements to quantize

Definition at line 549 of file quantization.cpp.

References block_q6_K::d, fp32_to_fp16(), GGML_QK_K, GGUF_EPSILON, Q6K_OFFSET, Q6K_SCALE_FACTOR, block_q6_K::qh, block_q6_K::ql, SAFE_MAX, SAFE_MIN, and block_q6_K::scales.

◆ vec_dot_q4_k_q8_k_cpu()

| float vec_dot_q4_k_q8_k_cpu | ( | int | n, |

| const std::vector< block_q4_K > & | x_vec, | ||

| const std::vector< block_q8_K > & | y_vec, | ||

| bool | log_this_call | ||

| ) |

Computes dot product between Q4_K and Q8_K vectors on CPU.

- Parameters

-

n Number of blocks x_vec Q4_K vector y_vec Q8_K vector log_this_call Whether to log computation details

- Returns

- Dot product result

Definition at line 922 of file quantization.cpp.

References Logger::debug(), fp16_to_fp32(), g_vec_dot_q4_k_q8_k_log_count, get_scale_min_indices_q4_K(), GGML_QK_K, K_MIN_VALUES, K_SCALE_VALUES, block_q4_K::qs, and block_q8_K::qs.

Referenced by matvec_q4k_q8k_cpu().

◆ vec_dot_q6_k_q8_k_cpu()

| float vec_dot_q6_k_q8_k_cpu | ( | int | n, |

| const std::vector< block_q6_K > & | x, | ||

| const std::vector< block_q8_K > & | y, | ||

| bool | log_this_call | ||

| ) |

Computes dot product between Q6_K and Q8_K vectors on CPU.

- Parameters

-

n Number of blocks x Q6_K vector y Q8_K vector log_this_call Whether to log computation details

- Returns

- Dot product result

Definition at line 772 of file quantization.cpp.

References block_q8_K::bsums, block_q6_K::d, Logger::debug(), fp16_to_fp32(), GGML_QK_K, block_q6_K::qh, block_q6_K::ql, block_q8_K::qs, and block_q6_K::scales.

Referenced by matvec_q6k_q8k_cpu().