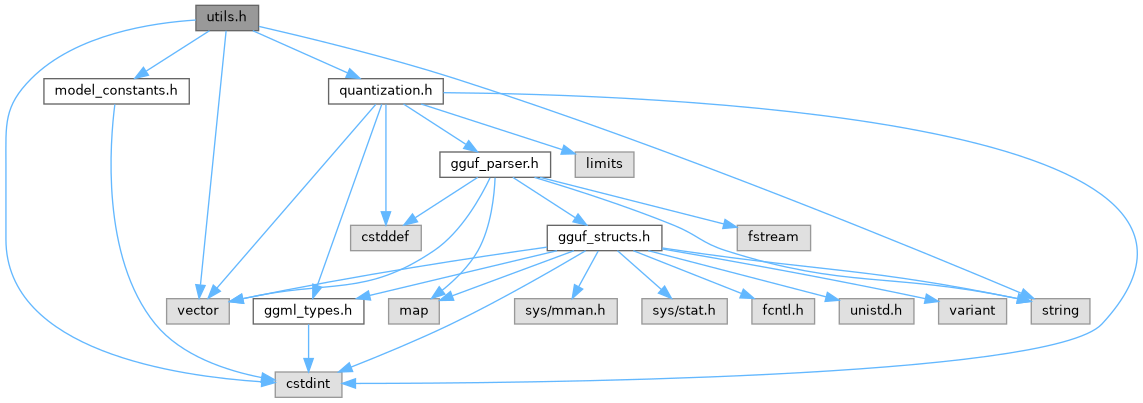

#include <vector>#include <string>#include <cstdint>#include "quantization.h"#include "model_constants.h"

Go to the source code of this file.

Functions | |

| float | simd_dot_product (const float *a, const float *b, int n) |

| void | simd_scaled_add (float *dst, const float *src, float scale, int n) |

| uint16_t | float32_to_bfloat16 (float val) |

| float | bfloat16_to_float32 (uint16_t bf16) |

| std::vector< float > | bfloat16_vector_to_float32 (const std::vector< uint16_t > &bf16_vec) |

| std::vector< uint16_t > | uint8_vector_to_uint16_vector (const std::vector< uint8_t > &bytes, size_t numel) |

| int | argmax (const std::vector< float > &v) |

| void | matvec_q6k_f32_vector_cpu (const std::vector< block_q6_K > &mat_q6k, const std::vector< float > &vec_f32, std::vector< float > &out_f32, int rows, int cols, bool log_first_block=false) |

| void | matvec_q4k_f32_vector_cpu (const std::vector< block_q4_K > &mat_q4k, const std::vector< float > &vec_f32, std::vector< float > &out_f32, int rows, int cols, bool log_first_block=false) |

| void | matvec_q8_0_f32_vector_cpu (const std::vector< block_q8_0 > &mat_q8_0, const std::vector< float > &vec_f32, std::vector< float > &out_f32, int rows, int cols, bool log_first_block=false) |

| void | matvec_q8k_f32_vector_cpu (const std::vector< block_q8_K > &mat_q8k, const std::vector< float > &vec_f32, std::vector< float > &out_f32, int rows, int cols, bool log_first_block=false) |

| void | matvec_f32_f32_vector_cpu (const std::vector< float > &mat_f32, const std::vector< float > &vec_f32, std::vector< float > &out_f32, int rows, int cols) |

| void | matmul_q4k_f32_batch_cpu (const std::vector< block_q4_K > &mat_q4k, const std::vector< float > &batch_input_activations, std::vector< float > &batch_output_activations, int num_tokens, int output_dim, int input_dim) |

| void | matmul_q6k_f32_batch_cpu (const std::vector< block_q6_K > &mat_q6k, const std::vector< float > &batch_input_activations, std::vector< float > &batch_output_activations, int num_tokens, int output_dim, int input_dim) |

| void | matmul_q8_0_f32_batch_cpu (const std::vector< block_q8_0 > &mat_q8_0, const std::vector< float > &batch_input_activations, std::vector< float > &batch_output_activations, int num_tokens, int output_dim, int input_dim) |

| void | matmul_q8k_f32_batch_cpu (const std::vector< block_q8_K > &mat_q8k, const std::vector< float > &batch_input_activations, std::vector< float > &batch_output_activations, int num_tokens, int output_dim, int input_dim) |

| void | apply_rope_vector (std::vector< float > &x, int num_heads, int head_dim, int current_token_pos, const std::vector< std::pair< float, float > > &all_freqs_cis, int max_pos_embeddings, bool use_adjacent_pairing) |

| void | apply_rope_batch_cpu (std::vector< float > &q_batch, std::vector< float > &k_batch, int num_tokens, int num_q_heads, int num_kv_heads, int head_dim, int start_pos_in_sequence, const std::vector< std::pair< float, float > > &all_freqs_cis, int max_pos_embeddings, bool use_adjacent_pairing) |

| void | rmsnorm_batch_cpu (const std::vector< float > &x_batch, const std::vector< float > &weight, std::vector< float > &out_batch, int num_tokens, int hidden_size, float eps=numeric::DEFAULT_EPS) |

| void | rmsnorm_vector_cpu (const std::vector< float > &x, const std::vector< float > &weight, std::vector< float > &out, float eps=numeric::DEFAULT_EPS) |

| void | softmax_vector_cpu (const std::vector< float > &x, std::vector< float > &out) |

| void | silu_cpu (const std::vector< float > &x, std::vector< float > &out) |

| void | matmul_f32_f32_batch_cpu (const std::vector< float > &mat_weights, const std::vector< float > &batch_input_activations, std::vector< float > &batch_output_activations, int num_tokens, int output_dim, int input_dim) |

| void | matvec_bf16_f32_vector_cpu (const std::vector< uint16_t > &mat_bf16, const std::vector< float > &vec_f32, std::vector< float > &out_f32, int rows, int cols) |

| void | weighted_sum_probs_v (const std::vector< float > &probs, const std::vector< float > &V, std::vector< float > &out, int seq_len, int head_dim) |

| void | calculate_attention_scores (const std::vector< float > &Q, const std::vector< float > &K, std::vector< float > &scores, int seq_len, int head_dim, float scale) |

| void | log_vector_summary (const std::string &name, const std::vector< float > &v, int head_count) |

| void | log_vector_summary_with_tail (const std::string &name, const std::vector< float > &v, int head_count, int tail_count) |

| void | log_vector_summary_detailed (const std::string &name, const std::vector< float > &v, int current_pos, int current_layer, int N=5) |

| void | log_vec_stats (const std::string &name, const std::vector< float > &v) |

| void | log_raw_float_pointer (const std::string &name, const float *ptr, size_t count=5) |

| bool | write_vector_to_file (const std::string &filename, const std::vector< float > &vec) |

| std::vector< std::vector< float > > | load_rmsnorm_bin (const std::string &filename, int num_tokens, int hidden_size) |

| std::vector< float > | bf16vec_to_float_vec (const std::vector< uint16_t > &v_bf16) |

| void | dequantize_q8_k (const std::vector< block_q8_K > &q8k_vec, std::vector< float > &out_f32, int n, bool log_this_block) |

Function Documentation

◆ apply_rope_batch_cpu()

| void apply_rope_batch_cpu | ( | std::vector< float > & | q_batch, |

| std::vector< float > & | k_batch, | ||

| int | num_tokens, | ||

| int | num_q_heads, | ||

| int | num_kv_heads, | ||

| int | head_dim, | ||

| int | start_pos_in_sequence, | ||

| const std::vector< std::pair< float, float > > & | all_freqs_cis, | ||

| int | max_pos_embeddings, | ||

| bool | use_adjacent_pairing | ||

| ) |

Definition at line 491 of file utils.cpp.

References Logger::error(), and Logger::warning().

Referenced by CPUBatchProcessor::forward_cpu_batch().

◆ apply_rope_vector()

| void apply_rope_vector | ( | std::vector< float > & | x, |

| int | num_heads, | ||

| int | head_dim, | ||

| int | current_token_pos, | ||

| const std::vector< std::pair< float, float > > & | all_freqs_cis, | ||

| int | max_pos_embeddings, | ||

| bool | use_adjacent_pairing | ||

| ) |

Definition at line 428 of file utils.cpp.

References Logger::error(), and Logger::warning().

Referenced by TinyLlamaModel::forward(), CPUBatchProcessor::forward_cpu_batch(), and TinyLlamaModel::forward_cpu_batch_generation().

◆ argmax()

| int argmax | ( | const std::vector< float > & | v | ) |

◆ bf16vec_to_float_vec()

| std::vector< float > bf16vec_to_float_vec | ( | const std::vector< uint16_t > & | v_bf16 | ) |

Definition at line 198 of file utils.cpp.

References bfloat16_to_float32().

Referenced by TinyLlamaModel::ensure_down_proj_dequantized(), TinyLlamaModel::ensure_embed_tokens_dequantized(), TinyLlamaModel::ensure_gate_proj_dequantized(), TinyLlamaModel::ensure_k_proj_dequantized(), TinyLlamaModel::ensure_lm_head_dequantized(), TinyLlamaModel::ensure_o_proj_dequantized(), TinyLlamaModel::ensure_q_proj_dequantized(), TinyLlamaModel::ensure_up_proj_dequantized(), TinyLlamaModel::ensure_v_proj_dequantized(), TinyLlamaModel::forward(), CPUBatchProcessor::forward_cpu_batch(), TinyLlamaModel::forward_cpu_batch_generation(), TinyLlamaModel::forward_cpu_logits_batch(), TinyLlamaModel::initialize_gpu_and_rope(), TinyLlamaModel::initialize_weights(), and TinyLlamaModel::lookup_embedding().

◆ bfloat16_to_float32()

| float bfloat16_to_float32 | ( | uint16_t | bf16 | ) |

Definition at line 144 of file utils.cpp.

Referenced by bf16vec_to_float_vec(), bfloat16_vector_to_float32(), and matvec_bf16_f32_vector_cpu().

◆ bfloat16_vector_to_float32()

| std::vector< float > bfloat16_vector_to_float32 | ( | const std::vector< uint16_t > & | bf16_vec | ) |

Definition at line 165 of file utils.cpp.

References bfloat16_to_float32().

◆ calculate_attention_scores()

| void calculate_attention_scores | ( | const std::vector< float > & | Q, |

| const std::vector< float > & | K, | ||

| std::vector< float > & | scores, | ||

| int | seq_len, | ||

| int | head_dim, | ||

| float | scale | ||

| ) |

Definition at line 1091 of file utils.cpp.

References attention::ATTENTION_SCALE_BASE, attention::MAX_SCALE, and attention::MIN_SCALE.

◆ dequantize_q8_k()

| void dequantize_q8_k | ( | const std::vector< block_q8_K > & | q8k_vec, |

| std::vector< float > & | out_f32, | ||

| int | n, | ||

| bool | log_this_block | ||

| ) |

Definition at line 1009 of file quantization.cpp.

References block_q8_K::d, Logger::debug(), fp16_to_fp32(), GGML_QK_K, and block_q8_K::qs.

Referenced by TinyLlamaModel::ensure_down_proj_dequantized(), TinyLlamaModel::ensure_embed_tokens_dequantized(), TinyLlamaModel::ensure_gate_proj_dequantized(), TinyLlamaModel::ensure_k_proj_dequantized(), TinyLlamaModel::ensure_lm_head_dequantized(), TinyLlamaModel::ensure_o_proj_dequantized(), TinyLlamaModel::ensure_q_proj_dequantized(), TinyLlamaModel::ensure_up_proj_dequantized(), TinyLlamaModel::ensure_v_proj_dequantized(), TinyLlamaModel::initialize_weights(), and matvec_q8k_f32_vector_cpu().

◆ float32_to_bfloat16()

| uint16_t float32_to_bfloat16 | ( | float | val | ) |

Definition at line 136 of file utils.cpp.

Referenced by TinyLlamaModel::initialize_gpu_and_rope().

◆ load_rmsnorm_bin()

| std::vector< std::vector< float > > load_rmsnorm_bin | ( | const std::string & | filename, |

| int | num_tokens, | ||

| int | hidden_size | ||

| ) |

Definition at line 1157 of file utils.cpp.

◆ log_raw_float_pointer()

| void log_raw_float_pointer | ( | const std::string & | name, |

| const float * | ptr, | ||

| size_t | count = 5 |

||

| ) |

Definition at line 1175 of file utils.cpp.

References Logger::info().

◆ log_vec_stats()

| void log_vec_stats | ( | const std::string & | name, |

| const std::vector< float > & | v | ||

| ) |

Definition at line 1119 of file utils.cpp.

References Logger::info().

◆ log_vector_summary()

| void log_vector_summary | ( | const std::string & | name, |

| const std::vector< float > & | v, | ||

| int | head_count | ||

| ) |

Definition at line 207 of file utils.cpp.

◆ log_vector_summary_detailed()

| void log_vector_summary_detailed | ( | const std::string & | name, |

| const std::vector< float > & | v, | ||

| int | current_pos, | ||

| int | current_layer, | ||

| int | N = 5 |

||

| ) |

Definition at line 1190 of file utils.cpp.

References Logger::info(), and SAFE_MIN.

◆ log_vector_summary_with_tail()

| void log_vector_summary_with_tail | ( | const std::string & | name, |

| const std::vector< float > & | v, | ||

| int | head_count, | ||

| int | tail_count | ||

| ) |

Definition at line 234 of file utils.cpp.

References Logger::info(), and SAFE_MIN.

◆ matmul_f32_f32_batch_cpu()

| void matmul_f32_f32_batch_cpu | ( | const std::vector< float > & | mat_weights, |

| const std::vector< float > & | batch_input_activations, | ||

| std::vector< float > & | batch_output_activations, | ||

| int | num_tokens, | ||

| int | output_dim, | ||

| int | input_dim | ||

| ) |

Definition at line 709 of file utils.cpp.

References Logger::error().

Referenced by CPUBatchProcessor::forward_cpu_batch(), TinyLlamaModel::forward_cpu_batch_generation(), and TinyLlamaModel::forward_cpu_logits_batch().

◆ matmul_q4k_f32_batch_cpu()

| void matmul_q4k_f32_batch_cpu | ( | const std::vector< block_q4_K > & | mat_q4k, |

| const std::vector< float > & | batch_input_activations, | ||

| std::vector< float > & | batch_output_activations, | ||

| int | num_tokens, | ||

| int | output_dim, | ||

| int | input_dim | ||

| ) |

Definition at line 988 of file utils.cpp.

References Logger::error(), and matvec_q4k_f32_vector_cpu().

Referenced by CPUBatchProcessor::forward_cpu_batch(), TinyLlamaModel::forward_cpu_batch_generation(), and TinyLlamaModel::forward_cpu_logits_batch().

◆ matmul_q6k_f32_batch_cpu()

| void matmul_q6k_f32_batch_cpu | ( | const std::vector< block_q6_K > & | mat_q6k, |

| const std::vector< float > & | batch_input_activations, | ||

| std::vector< float > & | batch_output_activations, | ||

| int | num_tokens, | ||

| int | output_dim, | ||

| int | input_dim | ||

| ) |

Definition at line 950 of file utils.cpp.

References Logger::error(), and matvec_q6k_f32_vector_cpu().

Referenced by CPUBatchProcessor::forward_cpu_batch(), TinyLlamaModel::forward_cpu_batch_generation(), and TinyLlamaModel::forward_cpu_logits_batch().

◆ matmul_q8_0_f32_batch_cpu()

| void matmul_q8_0_f32_batch_cpu | ( | const std::vector< block_q8_0 > & | mat_q8_0, |

| const std::vector< float > & | batch_input_activations, | ||

| std::vector< float > & | batch_output_activations, | ||

| int | num_tokens, | ||

| int | output_dim, | ||

| int | input_dim | ||

| ) |

Definition at line 869 of file utils.cpp.

References Logger::error(), and matvec_q8_0_f32_vector_cpu().

Referenced by CPUBatchProcessor::forward_cpu_batch(), TinyLlamaModel::forward_cpu_batch_generation(), and TinyLlamaModel::forward_cpu_logits_batch().

◆ matmul_q8k_f32_batch_cpu()

| void matmul_q8k_f32_batch_cpu | ( | const std::vector< block_q8_K > & | mat_q8k, |

| const std::vector< float > & | batch_input_activations, | ||

| std::vector< float > & | batch_output_activations, | ||

| int | num_tokens, | ||

| int | output_dim, | ||

| int | input_dim | ||

| ) |

Definition at line 907 of file utils.cpp.

References GGML_QK_K, and matvec_q8k_f32_vector_cpu().

◆ matvec_bf16_f32_vector_cpu()

| void matvec_bf16_f32_vector_cpu | ( | const std::vector< uint16_t > & | mat_bf16, |

| const std::vector< float > & | vec_f32, | ||

| std::vector< float > & | out_f32, | ||

| int | rows, | ||

| int | cols | ||

| ) |

Definition at line 1025 of file utils.cpp.

References bfloat16_to_float32(), and Logger::error().

Referenced by TinyLlamaModel::forward().

◆ matvec_f32_f32_vector_cpu()

| void matvec_f32_f32_vector_cpu | ( | const std::vector< float > & | mat_f32, |

| const std::vector< float > & | vec_f32, | ||

| std::vector< float > & | out_f32, | ||

| int | rows, | ||

| int | cols | ||

| ) |

Definition at line 349 of file utils.cpp.

References Logger::error().

Referenced by TinyLlamaModel::forward(), and matvec_q8k_f32_vector_cpu().

◆ matvec_q4k_f32_vector_cpu()

| void matvec_q4k_f32_vector_cpu | ( | const std::vector< block_q4_K > & | mat_q4k, |

| const std::vector< float > & | vec_f32, | ||

| std::vector< float > & | out_f32, | ||

| int | rows, | ||

| int | cols, | ||

| bool | log_first_block = false |

||

| ) |

Definition at line 816 of file utils.cpp.

References dequantize_q4_k_m(), and GGML_QK_K.

Referenced by TinyLlamaModel::forward(), and matmul_q4k_f32_batch_cpu().

◆ matvec_q6k_f32_vector_cpu()

| void matvec_q6k_f32_vector_cpu | ( | const std::vector< block_q6_K > & | mat_q6k, |

| const std::vector< float > & | vec_f32, | ||

| std::vector< float > & | out_f32, | ||

| int | rows, | ||

| int | cols, | ||

| bool | log_first_block = false |

||

| ) |

Definition at line 763 of file utils.cpp.

References dequantize_q6_k(), and GGML_QK_K.

Referenced by TinyLlamaModel::forward(), and matmul_q6k_f32_batch_cpu().

◆ matvec_q8_0_f32_vector_cpu()

| void matvec_q8_0_f32_vector_cpu | ( | const std::vector< block_q8_0 > & | mat_q8_0, |

| const std::vector< float > & | vec_f32, | ||

| std::vector< float > & | out_f32, | ||

| int | rows, | ||

| int | cols, | ||

| bool | log_first_block = false |

||

| ) |

Definition at line 293 of file utils.cpp.

References dequantize_q8_0_block(), and GGML_QK8_0.

Referenced by TinyLlamaModel::forward(), and matmul_q8_0_f32_batch_cpu().

◆ matvec_q8k_f32_vector_cpu()

| void matvec_q8k_f32_vector_cpu | ( | const std::vector< block_q8_K > & | mat_q8k, |

| const std::vector< float > & | vec_f32, | ||

| std::vector< float > & | out_f32, | ||

| int | rows, | ||

| int | cols, | ||

| bool | log_first_block = false |

||

| ) |

Definition at line 399 of file utils.cpp.

References dequantize_q8_k(), GGML_QK_K, Logger::info(), and matvec_f32_f32_vector_cpu().

Referenced by TinyLlamaModel::forward(), and matmul_q8k_f32_batch_cpu().

◆ rmsnorm_batch_cpu()

| void rmsnorm_batch_cpu | ( | const std::vector< float > & | x_batch, |

| const std::vector< float > & | weight, | ||

| std::vector< float > & | out_batch, | ||

| int | num_tokens, | ||

| int | hidden_size, | ||

| float | eps = numeric::DEFAULT_EPS |

||

| ) |

Definition at line 613 of file utils.cpp.

References Logger::error(), and SAFE_SQRT.

Referenced by CPUBatchProcessor::forward_cpu_batch(), TinyLlamaModel::forward_cpu_batch_generation(), and TinyLlamaModel::forward_cpu_logits_batch().

◆ rmsnorm_vector_cpu()

| void rmsnorm_vector_cpu | ( | const std::vector< float > & | x, |

| const std::vector< float > & | weight, | ||

| std::vector< float > & | out, | ||

| float | eps = numeric::DEFAULT_EPS |

||

| ) |

Definition at line 648 of file utils.cpp.

References Logger::error(), numeric::MIN_NORM_EPS, SAFE_MAX, and SAFE_SQRT.

Referenced by TinyLlamaModel::forward().

◆ silu_cpu()

| void silu_cpu | ( | const std::vector< float > & | x, |

| std::vector< float > & | out | ||

| ) |

Definition at line 700 of file utils.cpp.

Referenced by TinyLlamaModel::forward().

◆ simd_dot_product()

| float simd_dot_product | ( | const float * | a, |

| const float * | b, | ||

| int | n | ||

| ) |

Definition at line 35 of file utils.cpp.

Referenced by TinyLlamaModel::forward_cpu_batch_generation().

◆ simd_scaled_add()

| void simd_scaled_add | ( | float * | dst, |

| const float * | src, | ||

| float | scale, | ||

| int | n | ||

| ) |

Definition at line 92 of file utils.cpp.

Referenced by TinyLlamaModel::forward_cpu_batch_generation().

◆ softmax_vector_cpu()

| void softmax_vector_cpu | ( | const std::vector< float > & | x, |

| std::vector< float > & | out | ||

| ) |

Definition at line 675 of file utils.cpp.

Referenced by attention_batch_cpu(), attention_batch_cpu_sequence_aware(), TinyLlamaModel::forward(), and TinyLlamaModel::forward_cpu_batch_generation().

◆ uint8_vector_to_uint16_vector()

| std::vector< uint16_t > uint8_vector_to_uint16_vector | ( | const std::vector< uint8_t > & | bytes, |

| size_t | numel | ||

| ) |

◆ weighted_sum_probs_v()

| void weighted_sum_probs_v | ( | const std::vector< float > & | probs, |

| const std::vector< float > & | V, | ||

| std::vector< float > & | out, | ||

| int | seq_len, | ||

| int | head_dim | ||

| ) |

Definition at line 1060 of file utils.cpp.

References Logger::error().

◆ write_vector_to_file()

| bool write_vector_to_file | ( | const std::string & | filename, |

| const std::vector< float > & | vec | ||

| ) |

Definition at line 1134 of file utils.cpp.

References Logger::error(), and Logger::info().