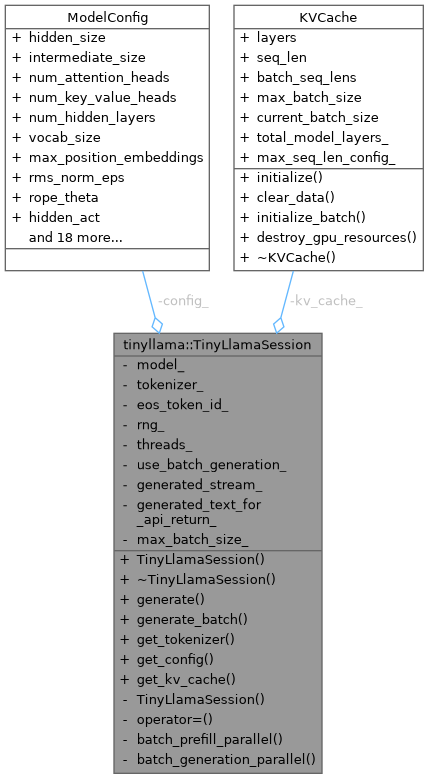

Represents an active TinyLlama session holding the loaded model and tokenizer. More...

#include <api.h>

Public Member Functions | |

| TinyLlamaSession (const std::string &model_path, const std::string &tokenizer_path, int threads=1, int num_gpu_layers_from_cli=0, bool cli_use_mmap=true, bool use_kv_quant=false, bool use_batch_generation=false, int max_batch_size=1) | |

| Loads the model, config, and tokenizer from the specified directory or GGUF file. | |

| ~TinyLlamaSession () | |

| Destructor to ensure proper cleanup (e.g., KVCache CUDA memory). | |

| std::string | generate (const std::string &prompt, int steps=128, float temperature=0.1f, int top_k=40, float top_p=0.9f, const std::string &system_prompt="", bool apply_q_a_format=false) |

| Generates text based on a given prompt. | |

| std::vector< std::string > | generate_batch (const std::vector< std::string > &prompts, int steps=128, float temperature=0.1f, int top_k=40, float top_p=0.9f, const std::string &system_prompt="", bool apply_q_a_format=false) |

| Generates text for multiple prompts in a single batch (parallel processing). | |

| const Tokenizer * | get_tokenizer () const |

| const ModelConfig & | get_config () const |

| KVCache & | get_kv_cache () |

Private Member Functions | |

| TinyLlamaSession (const TinyLlamaSession &)=delete | |

| TinyLlamaSession & | operator= (const TinyLlamaSession &)=delete |

| bool | batch_prefill_parallel (const std::vector< std::vector< int > > &all_tokens, const std::vector< int > &prompt_lengths, std::vector< std::vector< float > > &batch_final_logits) |

| bool | batch_generation_parallel (const std::vector< int > ¤t_tokens, const std::vector< int > &token_positions, const std::vector< int > &original_sequence_indices, std::vector< std::vector< float > > &batch_logits) |

Private Attributes | |

| std::unique_ptr< TinyLlamaModel > | model_ |

| std::unique_ptr< Tokenizer > | tokenizer_ |

| ModelConfig | config_ |

| KVCache | kv_cache_ |

| int | eos_token_id_ |

| std::mt19937 | rng_ {std::random_device{}()} |

| int | threads_ |

| bool | use_batch_generation_ |

| std::stringstream | generated_stream_ |

| std::string | generated_text_for_api_return_ |

| int | max_batch_size_ = 1 |

Detailed Description

Represents an active TinyLlama session holding the loaded model and tokenizer.

This class provides a high-level interface for text generation using TinyLlama models. It supports both GGUF and SafeTensors model formats, and handles model loading, tokenization, and text generation with various sampling strategies.

Constructor & Destructor Documentation

◆ TinyLlamaSession() [1/2]

| tinyllama::TinyLlamaSession::TinyLlamaSession | ( | const std::string & | model_path, |

| const std::string & | tokenizer_path, | ||

| int | threads = 1, |

||

| int | num_gpu_layers_from_cli = 0, |

||

| bool | cli_use_mmap = true, |

||

| bool | use_kv_quant = false, |

||

| bool | use_batch_generation = false, |

||

| int | max_batch_size = 1 |

||

| ) |

Loads the model, config, and tokenizer from the specified directory or GGUF file.

- Parameters

-

model_path Path to the directory containing model files OR a .gguf file. tokenizer_path Path to the tokenizer file. threads Number of threads to use for model loading. num_gpu_layers_from_cli Number of GPU layers to use from command-line arguments. cli_use_mmap Whether to use mmap for loading the model. use_kv_quant Whether to use INT8 quantization for the KVCache on GPU. use_batch_generation Whether to enable single-token batch generation. max_batch_size Maximum number of sequences for multi-prompt batch processing (default: 1).

- Exceptions

-

std::runtime_error if loading fails.

Definition at line 175 of file api.cpp.

References config_, ModelConfig::eos_token_id, eos_token_id_, ModelConfig::hidden_size, Logger::info(), KVCache::initialize(), ModelConfig::is_gguf_file_loaded, kv_cache_, ModelConfig::LLAMA3_TIKTOKEN, ModelConfig::LLAMA_SENTENCEPIECE, SafeTensorsLoader::load_model_config_from_json(), max_batch_size_, ModelConfig::max_position_embeddings, model_, ModelConfig::num_attention_heads, ModelConfig::num_cpu_offload_layers, ModelConfig::num_hidden_layers, ModelConfig::num_key_value_heads, tokenizer_, ModelConfig::tokenizer_family, ModelConfig::use_kvcache_quantization, ModelConfig::use_mmap_for_gguf, and Logger::warning().

◆ ~TinyLlamaSession()

| tinyllama::TinyLlamaSession::~TinyLlamaSession | ( | ) |

Destructor to ensure proper cleanup (e.g., KVCache CUDA memory).

Definition at line 429 of file api.cpp.

References Logger::info().

◆ TinyLlamaSession() [2/2]

|

privatedelete |

Member Function Documentation

◆ batch_generation_parallel()

|

private |

Definition at line 1367 of file api.cpp.

References config_, Logger::error(), ModelConfig::hidden_size, Logger::info(), kv_cache_, model_, ModelConfig::num_cpu_offload_layers, ModelConfig::num_hidden_layers, and Logger::warning().

Referenced by generate_batch().

◆ batch_prefill_parallel()

|

private |

Definition at line 1088 of file api.cpp.

References config_, Logger::error(), ModelConfig::hidden_size, Logger::info(), kv_cache_, model_, ModelConfig::num_cpu_offload_layers, ModelConfig::num_hidden_layers, and ModelConfig::vocab_size.

Referenced by generate_batch().

◆ generate()

| std::string tinyllama::TinyLlamaSession::generate | ( | const std::string & | prompt, |

| int | steps = 128, |

||

| float | temperature = 0.1f, |

||

| int | top_k = 40, |

||

| float | top_p = 0.9f, |

||

| const std::string & | system_prompt = "", |

||

| bool | apply_q_a_format = false |

||

| ) |

Generates text based on a given prompt.

This method supports various text generation strategies through its sampling parameters. For temperatures close to 0, it approaches deterministic/greedy sampling. For higher temperatures with top-k and top-p, it produces more diverse outputs.

The method can also apply Q:A formatting to the prompt, which is recommended for both GGUF and SafeTensors models when used via the command-line interface.

- Parameters

-

prompt The input prompt string. steps The number of tokens to generate. temperature Sampling temperature. Lower values (e.g., 0.1) make the output more focused and deterministic. top_k Top-K sampling parameter. Limits sampling to K most likely tokens. Set to 0 or vocab_size to disable. top_p Nucleus sampling parameter. Limits sampling to tokens comprising top P probability mass (0.0 to 1.0). system_prompt Optional system prompt to guide the generation. apply_q_a_format Whether to apply Q:A format ("Q: [prompt]\nA:"). Recommended true for command-line use.

- Returns

- The generated text string (excluding the prompt).

- Exceptions

-

std::runtime_error if generation fails.

Definition at line 433 of file api.cpp.

References KVCache::clear_data(), config_, Logger::debug(), Tokenizer::DEFAULT, eos_token_id_, Logger::error(), generated_stream_, generated_text_for_api_return_, ModelConfig::hidden_size, Logger::info(), kv_cache_, ModelConfig::LLAMA3_TIKTOKEN, ModelConfig::LLAMA_SENTENCEPIECE, tinyllama::log_vector_summary_detailed(), ModelConfig::max_position_embeddings, model_, ModelConfig::num_cpu_offload_layers, ModelConfig::num_hidden_layers, rng_, tinyllama::sample_top_k_top_p_temperature(), KVCache::seq_len, tokenizer_, ModelConfig::tokenizer_family, ModelConfig::vocab_size, and Logger::warning().

Referenced by generate_batch(), main(), and PYBIND11_MODULE().

◆ generate_batch()

| std::vector< std::string > tinyllama::TinyLlamaSession::generate_batch | ( | const std::vector< std::string > & | prompts, |

| int | steps = 128, |

||

| float | temperature = 0.1f, |

||

| int | top_k = 40, |

||

| float | top_p = 0.9f, |

||

| const std::string & | system_prompt = "", |

||

| bool | apply_q_a_format = false |

||

| ) |

Generates text for multiple prompts in a single batch (parallel processing).

This method processes multiple independent prompts simultaneously, providing significant efficiency gains compared to sequential generate() calls. Each prompt is processed independently with its own KV cache state.

- Parameters

-

prompts Vector of input prompt strings to process in batch. steps The number of tokens to generate for each prompt. temperature Sampling temperature. Lower values make output more focused and deterministic. top_k Top-K sampling parameter. Limits sampling to K most likely tokens. top_p Nucleus sampling parameter. Limits sampling to tokens comprising top P probability mass. system_prompt Optional system prompt applied to all prompts in the batch. apply_q_a_format Whether to apply Q:A format to all prompts in the batch.

- Returns

- Vector of generated text strings, one for each input prompt.

- Exceptions

-

std::runtime_error if batch generation fails or if prompts vector is empty.

Definition at line 780 of file api.cpp.

References batch_generation_parallel(), batch_prefill_parallel(), KVCache::clear_data(), config_, Tokenizer::DEFAULT, eos_token_id_, Logger::error(), generate(), Logger::info(), KVCache::initialize_batch(), kv_cache_, ModelConfig::LLAMA3_TIKTOKEN, ModelConfig::LLAMA_SENTENCEPIECE, max_batch_size_, model_, rng_, tinyllama::sample_top_k_top_p_temperature(), KVCache::seq_len, tokenizer_, ModelConfig::tokenizer_family, use_batch_generation_, ModelConfig::vocab_size, and Logger::warning().

Referenced by main(), and PYBIND11_MODULE().

◆ get_config()

|

inline |

◆ get_kv_cache()

|

inline |

◆ get_tokenizer()

|

inline |

◆ operator=()

|

privatedelete |

Member Data Documentation

◆ config_

|

private |

Definition at line 125 of file api.h.

Referenced by batch_generation_parallel(), batch_prefill_parallel(), generate(), generate_batch(), get_config(), and TinyLlamaSession().

◆ eos_token_id_

|

private |

Definition at line 127 of file api.h.

Referenced by generate(), generate_batch(), and TinyLlamaSession().

◆ generated_stream_

|

private |

Definition at line 131 of file api.h.

Referenced by generate().

◆ generated_text_for_api_return_

|

private |

Definition at line 132 of file api.h.

Referenced by generate().

◆ kv_cache_

|

private |

Definition at line 126 of file api.h.

Referenced by batch_generation_parallel(), batch_prefill_parallel(), generate(), generate_batch(), get_kv_cache(), and TinyLlamaSession().

◆ max_batch_size_

|

private |

Definition at line 135 of file api.h.

Referenced by generate_batch(), and TinyLlamaSession().

◆ model_

|

private |

Definition at line 123 of file api.h.

Referenced by batch_generation_parallel(), batch_prefill_parallel(), generate(), generate_batch(), and TinyLlamaSession().

◆ rng_

|

private |

Definition at line 128 of file api.h.

Referenced by generate(), and generate_batch().

◆ threads_

◆ tokenizer_

|

private |

Definition at line 124 of file api.h.

Referenced by generate(), generate_batch(), get_tokenizer(), and TinyLlamaSession().

◆ use_batch_generation_

|

private |

Definition at line 130 of file api.h.

Referenced by generate_batch().

The documentation for this class was generated from the following files: